Based on Apache Spark 1.6.0

Apache Spark provides a special API for stream processing. This allows users to write streaming jobs the same way they write batch jobs. It currently supports Java, Scala, and Python.

Spark Streaming follows a “micro-batch” architecture. It receives data from various input sources and groups it into small batches, each created over a fixed time interval. At the beginning of each interval a new batch is created, and any data that arrives during that interval is added to that batch. At the end of the interval the batch is complete. The length of the interval is set by a parameter called the batch interval, which the application developer configures and which is typically between 500 milliseconds and several seconds. Each input batch forms an RDD and is processed using Spark jobs to create other RDDs.
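To make the batching rule concrete, here is a minimal plain-Scala sketch of how arrival times map to batches. It does not use Spark and is not how Spark implements receivers; `Event`, `batchIndex`, and `toBatches` are hypothetical names made up for this illustration.

```scala
object MicroBatchSketch {
  // Hypothetical event type: an arrival time in milliseconds plus a payload.
  final case class Event(arrivalMs: Long, payload: String)

  // Batch n covers the half-open interval
  // [n * batchIntervalMs, (n + 1) * batchIntervalMs).
  def batchIndex(arrivalMs: Long, batchIntervalMs: Long): Long = {
    require(batchIntervalMs > 0, "batch interval must be positive")
    arrivalMs / batchIntervalMs
  }

  // Group events by batch index and return the batches in time order.
  // Intervals in which nothing arrived are simply absent from this model.
  def toBatches(events: Seq[Event], batchIntervalMs: Long): Seq[(Long, Seq[String])] =
    events
      .groupBy(e => batchIndex(e.arrivalMs, batchIntervalMs))
      .toSeq
      .sortBy(_._1)
      .map { case (index, batch) => (index, batch.sortBy(_.arrivalMs).map(_.payload)) }
}
```

With a 1000 ms batch interval, events arriving at 100 ms and 950 ms land in batch 0, an event at exactly 1000 ms opens batch 1, and an event at 2400 ms lands in batch 2. In Spark itself the batch interval is the second argument to the `StreamingContext` constructor, for example `new StreamingContext(conf, Seconds(1))`.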

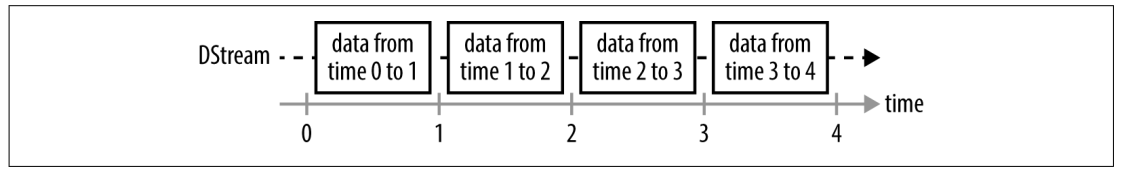

Spark Streaming provides an abstraction called DStreams, or discretized streams. A DStream is a sequence of data arriving over time. Internally, each DStream is represented as a sequence of RDDs arriving at each time step.

In the image above, the batch interval is 1 second, so a new RDD is created every second, and the DStream represents this sequence of RDDs.

For fault tolerance, received data is copied to two nodes, so Spark Streaming can tolerate a single worker failure. Spark Streaming also provides a mechanism called checkpointing, which periodically saves state to a file system (such as HDFS or S3). You may set up these checkpoints every 5-10 batches of data. In case of failure, Spark Streaming resumes from the last checkpoint.
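A minimal sketch of enabling checkpointing and resuming from it might look like the following. It needs the Spark Streaming library on the classpath; the checkpoint path, the application name, and the socket source on `localhost:9999` are assumptions chosen for illustration, not values from this post.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.streaming.{Seconds, StreamingContext}

object CheckpointSketch {
  // Hypothetical checkpoint location; replace with a directory on HDFS, S3, etc.
  val checkpointDir = "hdfs:///tmp/streaming-checkpoint"

  // Builds a fresh context. Called only when no checkpoint exists yet.
  def createContext(): StreamingContext = {
    // The master URL is expected to come from spark-submit.
    val conf = new SparkConf().setAppName("CheckpointSketch")
    val ssc = new StreamingContext(conf, Seconds(1))
    ssc.checkpoint(checkpointDir) // turn on checkpointing to this directory
    val lines = ssc.socketTextStream("localhost", 9999)
    lines.print()
    ssc
  }

  def main(args: Array[String]): Unit = {
    // Recover from the checkpoint if one exists, otherwise build a new context.
    val ssc = StreamingContext.getOrCreate(checkpointDir, createContext _)
    ssc.start()
    ssc.awaitTermination()
  }
}
```

The whole stream definition lives inside `createContext` so that the same setup is used whether the context is built fresh or restored from the checkpoint.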

Transformations apply an operation to the current DStream and generate a new DStream.

Transformations on DStreams can be grouped into either stateless or stateful:

In stateless transformations the processing of each batch does not depend on the data of its previous batches.

Stateful transformations, in contrast, use data or intermediate results from previous batches to compute the results of the current batch. They include transformations based on sliding windows and on tracking state across time.

Stateless transformations are as simple as the ones you already apply to RDDs. Stateful transformations need more care, because the result for the current batch depends on previous batches.

The two main types of stateful transformations are:

- Windowed operations

- updateStateByKey

Windowed operations compute results across a longer time period than a single batch interval by combining the results from multiple batches. All windowed operations take two parameters: the window duration and the sliding duration. Both must be multiples of the batch interval.

The window duration controls how many previous batches are considered for the operation. The sliding duration, which defaults to the batch interval, controls how frequently the new DStream computes results.

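```scala
// Read lines from a TCP socket and keep only those that mention "error"
val lines = streamingContext.socketTextStream("localhost", 9999)
val errorLines = lines.filter(_.contains("error"))
// Every 10 seconds, emit the error lines seen during the last 30 seconds
val recentErrors = errorLines.window(Seconds(30), Seconds(10))
```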
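To see which batches each window covers, the following plain-Scala sketch models the window and sliding durations as counts of batches. It does not use Spark; `WindowSketch` and its parameter names are hypothetical, and the early, partially filled windows reflect this model's own simplification.

```scala
object WindowSketch {
  // For batches numbered 0 until numBatches, return the batch indices
  // covered by each emitted window, in emission order.
  def windows(numBatches: Int, batchSec: Int, windowSec: Int, slideSec: Int): List[List[Int]] = {
    require(batchSec > 0 && windowSec > 0 && slideSec > 0, "durations must be positive")
    require(windowSec % batchSec == 0, "window duration must be a multiple of the batch interval")
    require(slideSec % batchSec == 0, "sliding duration must be a multiple of the batch interval")
    val batchesPerWindow = windowSec / batchSec
    val batchesPerSlide = slideSec / batchSec
    // A window is emitted after every `batchesPerSlide` batches and looks back
    // over at most `batchesPerWindow` batches.
    (batchesPerSlide to numBatches by batchesPerSlide).map { end =>
      (math.max(0, end - batchesPerWindow) until end).toList
    }.toList
  }
}
```

Assuming a 10-second batch interval, a 30-second window sliding every 10 seconds over four batches gives `WindowSketch.windows(4, 10, 30, 10) == List(List(0), List(0, 1), List(0, 1, 2), List(1, 2, 3))`: once enough batches have arrived, every window spans three batches and consecutive windows overlap by two.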

updateStateByKey helps us maintain state across batches by providing access to a state variable for DStreams of key/value pairs. Given a DStream of (key, event) pairs, it lets you construct a new DStream of (key, state) pairs by taking a function that specifies how to update the state for each key given new events. To use updateStateByKey(), you provide a function update(events, oldState) that takes the events that have arrived for a key and its previous state, and returns a newState to store for it. The result of updateStateByKey() is a new DStream that contains an RDD of (key, state) pairs on each time step.

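```scala
// Key each line by its text, with the line length as the value
val lineWithLength = lines.map { line => (line, line.length) }
// someFunction has the shape (Seq[Int], Option[S]) => Option[S] for a state type S
lineWithLength.updateStateByKey(someFunction)
```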
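As one possible shape for such an update function, here is a running count per key, together with a plain-Scala stand-in for a single batch step so it can be tried without Spark. `updateCount` and `step` are hypothetical names, and `step` only imitates the per-key bookkeeping; it is not Spark's implementation.

```scala
object RunningCountSketch {
  // Update function in the shape updateStateByKey expects: the new values
  // for a key in this batch, plus the key's previous state (if any).
  def updateCount(newValues: Seq[Int], oldState: Option[Int]): Option[Int] =
    Some(oldState.getOrElse(0) + newValues.sum)

  // Stand-in for one batch: apply the update function to every key that
  // has either new events or existing state.
  def step(state: Map[String, Int], batch: Seq[(String, Int)]): Map[String, Int] = {
    val eventsByKey = batch.groupBy(_._1).map { case (k, kvs) => k -> kvs.map(_._2) }
    val keys = state.keySet ++ eventsByKey.keySet
    keys.flatMap { k =>
      // Returning None from the update function would drop the key.
      updateCount(eventsByKey.getOrElse(k, Seq.empty[Int]), state.get(k)).map(k -> _)
    }.toMap
  }
}
```

On a real `DStream[(String, Int)]` named, say, `pairs`, the same function could be passed as `pairs.updateStateByKey[Int](RunningCountSketch.updateCount _)`. Note that Spark requires a checkpoint directory to be set on the streaming context before `updateStateByKey` can be used.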

Output operations, or actions, specify what to do with the final transformed data in a stream, and are similar to actions on RDDs. A common debugging output operation is print(), which prints the first 10 elements from each batch of the DStream.

Some Tips

- The smallest batch size generally recommended for Spark Streaming is 500 milliseconds; it has proven to be a good minimum for many applications.

- The best approach is to start with a larger batch size (around 10 seconds) and work your way down to a smaller batch size.

- Receivers can sometimes act as a bottleneck if there are too many records for a single machine to read in and distribute. You can add more receivers by creating multiple input DStreams and then applying union to merge them into a single stream.

- If receivers cannot be increased anymore, you can further redistribute the received data by explicitly repartitioning the input stream using DStream.repartition.
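The last two tips can be sketched roughly as follows. This needs the Spark Streaming library on the classpath; the three socket sources on ports 9999 to 10001, the `local[4]` master, and the partition count of 8 are assumptions chosen for illustration.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.streaming.{Seconds, StreamingContext}

object ParallelReceiversSketch {
  def main(args: Array[String]): Unit = {
    // Each receiver occupies one core, so the master must offer more cores
    // than there are receivers; local[4] leaves one core for processing.
    val conf = new SparkConf().setAppName("ParallelReceiversSketch").setMaster("local[4]")
    val ssc = new StreamingContext(conf, Seconds(10))

    // One input DStream (and therefore one receiver) per hypothetical port.
    val streams = (0 until 3).map(i => ssc.socketTextStream("localhost", 9999 + i))

    // Merge the receivers' output into a single DStream.
    val unified = ssc.union(streams)

    // Spread the received data over more partitions before heavy processing.
    val repartitioned = unified.repartition(8)

    repartitioned.print()
    ssc.start()
    ssc.awaitTermination()
  }
}
```

Starting with the larger 10-second batch interval follows the earlier tip; it can be lowered once the job keeps up with the input.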

