In this blog we will install and start a single-node Kafka broker, using the latest recommended Kafka release at the time of writing (0.10.2.0) with the binary built for Scala 2.12, on an EC2 Linux instance running CentOS. We will use a t2.micro (free tier) instance, which comes with 1 GB of RAM and an 8 GB SSD volume.

Prerequisites ->

1). Create an EC2 instance ->

The steps for creating an EC2 instance are described in the official AWS documentation; check here.

2). Install java 8 ->

Since we will be working with the Kafka binary for Scala 2.12, our instance must have Java 8, because Scala 2.12 requires Java 8 or later. The Java installed by default on an EC2 instance may be Java 7. You can check the Java version on your instance and upgrade it to 8 by following the steps here.
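A quick way to check the installed version is `java -version`, which prints a string such as `1.8.0_121` (old numbering) or `11.0.2` (numbering from Java 9 on). The sketch below uses a hypothetical helper, `java_major`, to turn that string into a major version number and compare it with 8; it is an illustration, not part of Kafka.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Java major version from a version string.
# "1.8.0_121" -> 8 (pre-Java 9 scheme), "11.0.2" -> 11 (Java 9+ scheme).
java_major() {
  local version="$1"
  local first="${version%%.*}"   # text before the first dot
  if [ "$first" = "1" ]; then
    local rest="${version#1.}"   # strip the leading "1."
    echo "${rest%%.*}"           # "8.0_121" -> "8"
  else
    echo "$first"
  fi
}

# java -version writes to stderr; the version is the first quoted field.
if command -v java >/dev/null 2>&1; then
  ver=$(java -version 2>&1 | awk -F '"' '/version/ {print $2; exit}')
  if [ "$(java_major "$ver")" -ge 8 ]; then
    echo "Java $ver is recent enough for the Scala 2.12 build"
  else
    echo "Java $ver is too old; install Java 8"
  fi
fi
```

On CentOS, OpenJDK 8 is packaged as `java-1.8.0-openjdk`, so `sudo yum install -y java-1.8.0-openjdk` installs it, and `sudo alternatives --config java` switches the default if several versions are installed.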

After installing Java 8, follow the steps below in order to start Kafka on your instance.

Step 1 -> Downloading and Extracting Kafka

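Download kafka_2.12-0.10.2.0.tgz. If the mirror below is no longer available, the same file is kept in the Apache archive at https://archive.apache.org/dist/kafka/0.10.2.0/kafka_2.12-0.10.2.0.tgz.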

wget http://mirror.fibergrid.in/apache/kafka/0.10.2.0/kafka_2.12-0.10.2.0.tgz

Extract the .tgz file

tar -xzf kafka_2.12-0.10.2.0.tgz

Since the archive is no longer needed, remove it:

rm kafka_2.12-0.10.2.0.tgz

Step 2 -> Starting ZooKeeper

Kafka uses ZooKeeper, so we first need to start a ZooKeeper server. We could run ZooKeeper on a separate instance, but here we will use the convenience script packaged with Kafka, which starts a single-node ZooKeeper instance using the settings in config/zookeeper.properties. Since the instance has only 1 GB of RAM, we set the KAFKA_HEAP_OPTS environment variable in our .bashrc to about 50% of total RAM, i.e. 500 MB. Both zookeeper-server-start.sh and kafka-server-start.sh read this variable; without it, kafka-server-start.sh defaults to a 1 GB heap (-Xmx1G -Xms1G), which does not fit on this instance.

vi .bashrc

Insert the following line:

export KAFKA_HEAP_OPTS="-Xmx500M -Xms500M"

After setting the variable, source your .bashrc:

source .bashrc
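The 500 MB figure above is simply half of the instance's 1 GB. If you later move to a larger instance, the same rule can be computed instead of hard-coded. The sketch below assumes a Linux host that exposes /proc/meminfo; `half_ram_mb` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: size KAFKA_HEAP_OPTS to half of the machine's total RAM instead of
# hard-coding 500M. Assumes a Linux host that exposes /proc/meminfo.
half_ram_mb() {
  # MemTotal is reported in kB; convert to MB and halve it (truncated).
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 / 2 }' /proc/meminfo
}

heap_mb=$(half_ram_mb)
export KAFKA_HEAP_OPTS="-Xmx${heap_mb}M -Xms${heap_mb}M"
echo "KAFKA_HEAP_OPTS=$KAFKA_HEAP_OPTS"
```

The result depends on what the kernel reports as MemTotal, which is usually slightly less than the instance's nominal RAM, so on a t2.micro it will land near, not exactly at, 500 MB.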

Start ZooKeeper in the background with nohup, redirecting its output to the file ~/zookeeper-logs:

cd kafka_2.12-0.10.2.0
nohup bin/zookeeper-server-start.sh config/zookeeper.properties > ~/zookeeper-logs &
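Before logging out, you can confirm that ZooKeeper is accepting connections on its client port (2181 in the default config/zookeeper.properties). The sketch below is a hypothetical helper, `wait_for_port`, that relies only on bash's built-in /dev/tcp redirection, so no extra packages are needed; paste it into your shell or add it to .bashrc.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until something accepts TCP connections on host:port.
# Uses bash's built-in /dev/tcp redirection, so no extra tools are needed.
# Returns 0 once the port is open, 1 if it is still closed after $3 seconds.
wait_for_port() {
  local host="$1" port="$2" timeout_s="${3:-30}"
  local i
  for ((i = 0; i < timeout_s; i++)); do
    # Opening fd 3 in a subshell succeeds only if the connection is accepted.
    if (exec 3<>"/dev/tcp/${host}/${port}") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

For example, `wait_for_port localhost 2181 30 && echo "ZooKeeper is up"` waits up to 30 seconds. The same helper works for the Kafka broker on port 9092 once it has been started.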

Then press ctrl+d to log out of the instance.

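SSH to your instance again and check the contents of the zookeeper-logs file. It should show ZooKeeper starting without errors and binding to its client port (2181 by default, set by clientPort in config/zookeeper.properties).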

NOTE -> If the zookeeper-logs file shows something different (for example, ZooKeeper failed to start), try freeing the RAM buffers/cache with the following commands and then re-run the cd and nohup commands above. This can happen because a t2.micro instance has very little RAM, and is unlikely on bigger instances. Writing 1 to drop_caches frees the page cache, 2 frees dentries and inodes, and 3 frees both.

sudo sh -c 'echo 1 >/proc/sys/vm/drop_caches'
sudo sh -c 'echo 2 >/proc/sys/vm/drop_caches'
sudo sh -c 'echo 3 >/proc/sys/vm/drop_caches'
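As a rough check before re-running the start command, the sketch below (the function name `enough_mem_for_heap` is hypothetical) tests whether the kernel reports at least a given number of MB as available. It reads MemAvailable from /proc/meminfo, which already counts cache the kernel can reclaim, and falls back to MemFree on older kernels that do not report MemAvailable. Treat it as a heuristic, not a guarantee that the JVM will start.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if at least $1 MB of memory is available.
# Prefers MemAvailable; falls back to MemFree when the kernel lacks it.
enough_mem_for_heap() {
  local need_mb="$1"
  local avail_kb
  avail_kb=$(awk '/^MemAvailable:/ {print $2}' /proc/meminfo)
  if [ -z "$avail_kb" ]; then
    avail_kb=$(awk '/^MemFree:/ {print $2}' /proc/meminfo)
  fi
  # /proc/meminfo reports kB; compare in MB.
  [ $((avail_kb / 1024)) -ge "$need_mb" ]
}
```

For example, `enough_mem_for_heap 500 || echo "Less than 500 MB available"` flags the case where the 500 MB heap set in KAFKA_HEAP_OPTS is unlikely to fit.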

Step 3 -> Starting Kafka

After ZooKeeper has started successfully, it's time to start Kafka with the following commands:

cd kafka_2.12-0.10.2.0
nohup bin/kafka-server-start.sh config/server.properties > ~/kafka-logs &
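Instead of reading ~/kafka-logs by eye, you can wait for the broker's startup message to appear. The sketch below is a hypothetical helper, `wait_for_log`, that polls a file for a fixed string. The exact startup line is an assumption based on the 0.10.x log format, so check your own log once and adjust the string if it differs.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wait until a log file contains a given fixed string.
# Returns 0 when the string appears, 1 if it has not appeared after $3 seconds.
wait_for_log() {
  local file="$1" pattern="$2" timeout_s="${3:-60}"
  local i
  for ((i = 0; i < timeout_s; i++)); do
    # -F: treat the pattern literally (it contains parentheses and dots).
    if grep -qF -- "$pattern" "$file" 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}
```

For example, `wait_for_log ~/kafka-logs "started (kafka.server.KafkaServer)" 60 && echo "Kafka is up"` waits up to a minute for the assumed startup line.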

Then press ctrl+d to log out of the instance.

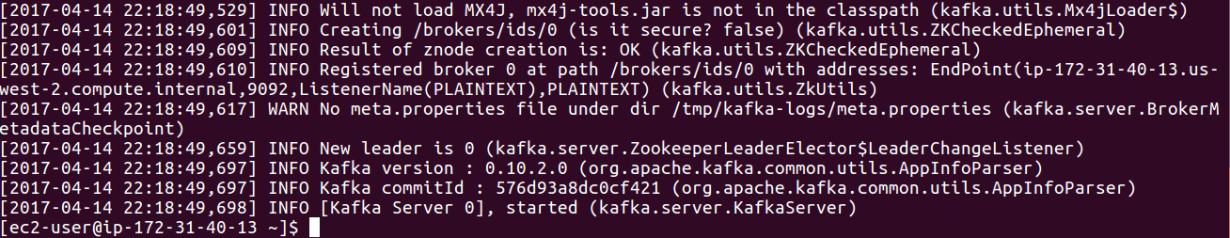

SSH to your instance again and check the contents of the kafka-logs file. It should end with a message saying that the Kafka server has started.

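Kafka is now running on your EC2 instance. You can access the broker through Kafka's Scala or Java client API after allowing inbound traffic on the broker port (9092 by default) in the instance's security group. To stop Kafka and ZooKeeper, run the following commands from the kafka_2.12-0.10.2.0 directory, stopping Kafka before ZooKeeper: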

bin/kafka-server-stop.sh
bin/zookeeper-server-stop.sh
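For remote clients, opening the port may not be enough on EC2: the broker tells clients which address to reconnect to, and unless configured otherwise that is the host name the instance resolves for itself, typically its private DNS name. The sketch below (the function `set_advertised_listener` is hypothetical) rewrites advertised.listeners in config/server.properties so the broker advertises a host name that remote clients can reach. It assumes GNU sed, as on CentOS, and a PLAINTEXT listener, which has no encryption or authentication, so restrict the security group to your clients' IP addresses.

```shell
#!/usr/bin/env bash
# Hypothetical helper: make the broker advertise a reachable host name by
# rewriting advertised.listeners in a server.properties file.
# Assumes GNU sed (as on CentOS) for in-place editing with -i.
set_advertised_listener() {
  local props="$1" host="$2" port="${3:-9092}"
  # Remove any existing advertised.listeners line, commented out or not.
  sed -i '/^#\{0,1\}advertised\.listeners=/d' "$props"
  # Append the new setting; PLAINTEXT means no TLS and no authentication.
  echo "advertised.listeners=PLAINTEXT://${host}:${port}" >> "$props"
}
```

For example, from the kafka_2.12-0.10.2.0 directory: `set_advertised_listener config/server.properties "$(curl -s http://169.254.169.254/latest/meta-data/public-hostname)"`, then stop and start the broker so it picks up the change. The URL is the EC2 instance metadata endpoint; on instances that enforce IMDSv2, this plain request needs a session token first.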

I hope this blog helps you. Comments and suggestions are welcome.

This requires too much maintenance and an extra resource for monitoring. Is there a plug-and-play option for this, like there is for Cassandra?

Hey, Amazon Kinesis could be one feasible option for your use case. Please refer here: https://aws.amazon.com/kafka/ . Kinesis is easy to maintain and can be used through its AWS APIs, but it is a bit more expensive than running and maintaining Kafka on EC2 yourself. I have not personally used Kinesis, so I am not sure it is as rich as Kafka in functionality.

Thanks for the blog. Have you tried enabling SSL for the Kafka broker with a signed certificate?

Hi Sahil, if we have a 4-node cluster, should we follow the above steps on all 4 nodes, start the broker on the master node and consume on the other 3 nodes? Is this assumption correct?

Hello, the Kafka download link is broken. I tried to use wget escaping the official Kafka binary URL link in almost every way but the “?” character is resulting in a broken URL download link. Could you help me to download it? Right now I am trying to find another mirror link because I can’t make wget work properly.