In this blog, we will discuss what RDD partitioning is, why partitioning is important, and how to create and use Spark partitioners to minimize shuffle operations across the nodes of a distributed Spark application.

What is Partitioning?

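In Apache Spark, partitioning determines how the elements of an RDD are distributed across partitions, and therefore across nodes. Explicit partitioning is applied through a transformation that is available on all key-value pair RDDs. It is useful when we want values with the same or related keys to be grouped together. Which keys belong together is defined by a function of the key.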
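To make "a function of the key" concrete, here is a minimal plain-Scala sketch that needs no Spark at all. The names `KeyToPartition`, `partitionFor` and `groupKeys` are hypothetical and chosen only for illustration; the function mimics the hash-code-modulo idea behind hash partitioning and is not Spark's own implementation.

```scala
// Illustrative stand-in for a hash-based partitioning function (not Spark code).
object KeyToPartition {
  // Map a key to a partition index in the range [0, numPartitions).
  def partitionFor(key: Any, numPartitions: Int): Int = {
    val raw = key.hashCode % numPartitions
    // The % operator can return a negative value, so shift it back into range.
    if (raw < 0) raw + numPartitions else raw
  }

  // Group keys by the partition index they would be assigned to.
  def groupKeys[K](keys: Seq[K], numPartitions: Int): Map[Int, Seq[K]] =
    keys.groupBy(key => partitionFor(key, numPartitions))
}
```

For example, `KeyToPartition.groupKeys(Seq(1, 2, 3, 4, 5), 3)` puts keys 1 and 4 in partition 1, keys 2 and 5 in partition 2, and key 3 in partition 0.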

Why is it Important?

Partitioning is especially important when working with key-value pair RDDs. For example, aggregating the values for certain keys in a distributed RDD requires fetching values from other nodes before the final aggregate per key can be computed. That is what we call a “shuffle operation” in Spark. Keeping the elements that share a key on the same node reduces communication costs.

How to apply a Partitioner to a pair RDD

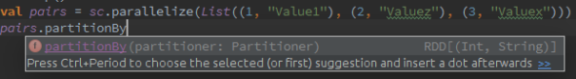

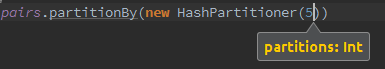

The partitionBy transformation applies a Partitioner to a pair RDD. The number of partitions (numOfPartitions) is specified in the Partitioner object.

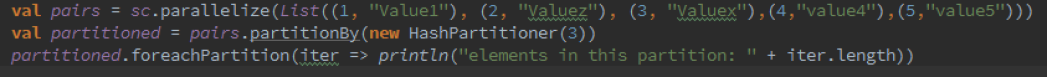

Here we create a pair RDD of [Int, String] and partition it with a HashPartitioner with 3 partitions. The HashPartitioner places elements in the same partition according to a function of the key: the key's hash code modulo the number of partitions.
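As a rough text version of this step, the sketch below builds a small pair RDD and hash-partitions it. It assumes Spark core is on the classpath and uses a local master; the sample data and the names `PartitionByExample` and `partitionedPairs` are made up for illustration and are not the author's original code.

```scala
import org.apache.spark.{HashPartitioner, SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object PartitionByExample {
  // Build a pair RDD of (Int, String) and hash-partition it into 3 partitions.
  def partitionedPairs(sc: SparkContext): RDD[(Int, String)] = {
    // Hypothetical sample data: 5 values with keys 1 to 5.
    val pairs = sc.parallelize(Seq((1, "a"), (2, "b"), (3, "c"), (4, "d"), (5, "e")))
    pairs.partitionBy(new HashPartitioner(3))
  }

  def main(args: Array[String]): Unit = {
    // Assumption: run locally with two worker threads.
    val conf = new SparkConf().setAppName("partition-by-example").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val partitioned = partitionedPairs(sc)
      println(partitioned.partitioner)      // the HashPartitioner is now attached to the RDD
      println(partitioned.getNumPartitions) // 3
    } finally sc.stop()
  }
}
```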

Let us look at the result of the partitioning by counting the values in each partition. Here the HashPartitioner has 3 partitions and the RDD holds 5 values in total. Counting the values in each partition shows how they were distributed.
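One way to do this counting is sketched below. It assumes Spark core on the classpath, a local master, and hypothetical sample data with keys 1 to 5; the helper name `countsPerPartition` is an illustrative choice, and the printed counts apply to this sample only, not to the author's original run.

```scala
import org.apache.spark.{HashPartitioner, SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object PartitionCounts {
  // Return (partitionIndex, elementCount) for every partition, in index order.
  def countsPerPartition[T](rdd: RDD[T]): Array[(Int, Int)] =
    rdd.mapPartitionsWithIndex((index, elements) => Iterator((index, elements.size))).collect()

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("partition-counts").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      // Hypothetical sample data: 5 values with keys 1 to 5.
      val pairs = sc.parallelize(Seq((1, "a"), (2, "b"), (3, "c"), (4, "d"), (5, "e")))
      val partitioned = pairs.partitionBy(new HashPartitioner(3))
      countsPerPartition(partitioned).foreach { case (index, count) =>
        println(s"partition $index: $count value(s)")
      }
    } finally sc.stop()
  }
}
```

With these sample keys, each Int key hashes to itself, so key 3 lands in partition 0, keys 1 and 4 in partition 1, and keys 2 and 5 in partition 2, giving counts of 1, 2 and 2.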

When do we benefit from partitioning?

When an RDD has already been partitioned by a previous transformation with the same Partitioner, the shuffle is avoided for at least one RDD, which reduces communication cost. The following are some of the transformations that benefit from pre-partitioned RDDs.

- join()

- cogroup()

- groupWith()

- leftOuterJoin()

- rightOuterJoin()

- groupByKey()

- reduceByKey()

- combineByKey()
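As a sketch of how a pre-partitioned RDD is used with one of these transformations, the example below joins a hash-partitioned RDD with a second, unpartitioned one, passing the same Partitioner to join() explicitly. It assumes Spark core on the classpath and a local master; the data and the names `PrePartitionedJoin`, `names` and `scores` are hypothetical. Persisting the partitioned RDD is a common recommendation so that the partitioning work is not repeated each time the RDD is reused.

```scala
import org.apache.spark.{HashPartitioner, SparkConf, SparkContext}
import org.apache.spark.rdd.RDD

object PrePartitionedJoin {
  // Join a pre-partitioned RDD with another RDD using the same partitioner.
  def joinWithPrePartitioned(sc: SparkContext): RDD[(Int, (String, Int))] = {
    val partitioner = new HashPartitioner(3)
    // Partition once and keep the result around for reuse.
    val names = sc.parallelize(Seq((1, "a"), (2, "b"), (3, "c")))
      .partitionBy(partitioner)
      .persist()
    val scores = sc.parallelize(Seq((1, 10), (2, 20), (4, 40)))
    // names already uses this partitioner, so only scores needs to be shuffled.
    names.join(scores, partitioner)
  }

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("pre-partitioned-join").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val joined = joinWithPrePartitioned(sc)
      println(joined.partitioner)   // the join result keeps the same partitioner
      println(joined.toDebugString) // lineage, useful for spotting shuffle stages
      joined.collect().foreach(println)
    } finally sc.stop()
  }
}
```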

When is partition information not preserved?

Not all transformations preserve partition information. When map() is called on a hash-partitioned key-value RDD, the resulting RDD does not keep the partition information, because the function passed to map() can change the keys of the elements, and the old partitioning would then be inconsistent with the new keys.
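This can be checked with a small sketch like the one below, which assumes Spark core on the classpath and a local master. The object name `MapDropsPartitioner` and the sample data are hypothetical. The mapping function leaves the keys untouched, but Spark cannot know that, so the partitioner is dropped anyway.

```scala
import org.apache.spark.{HashPartitioner, Partitioner, SparkConf, SparkContext}

object MapDropsPartitioner {
  // Return the partitioner of a hash-partitioned RDD after calling map() on it.
  def partitionerAfterMap(sc: SparkContext): Option[Partitioner] = {
    val partitioned = sc.parallelize(Seq((1, "a"), (2, "b"), (3, "c")))
      .partitionBy(new HashPartitioner(3))
    // Keys stay the same here, yet map() still discards the partitioner.
    val mapped = partitioned.map { case (key, value) => (key, value.toUpperCase) }
    mapped.partitioner
  }

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("map-drops-partitioner").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      println(partitionerAfterMap(sc)) // None
    } finally sc.stop()
  }
}
```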

How to preserve Partition Information?

Using the mapValues() and flatMapValues() transformations instead of map() and flatMap() on a pair RDD transforms only the values and keeps the keys intact, so the partition information is preserved. These transformations pass only the values to your function.
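A minimal sketch of this behaviour, assuming Spark core on the classpath and a local master, is shown below. The object name `ValuesKeepPartitioner` and the sample data are hypothetical.

```scala
import org.apache.spark.{HashPartitioner, Partitioner, SparkConf, SparkContext}

object ValuesKeepPartitioner {
  // Return the partitioners seen after mapValues() and after flatMapValues().
  def partitionersAfter(sc: SparkContext): (Option[Partitioner], Option[Partitioner]) = {
    val partitioned = sc.parallelize(Seq((1, "a"), (2, "b"), (3, "c")))
      .partitionBy(new HashPartitioner(3))
    // Both functions receive only the value, so the keys cannot change.
    val viaMapValues = partitioned.mapValues(_.toUpperCase).partitioner
    val viaFlatMapValues = partitioned.flatMapValues(value => Seq(value, value)).partitioner
    (viaMapValues, viaFlatMapValues)
  }

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("values-keep-partitioner").setMaster("local[2]")
    val sc = new SparkContext(conf)
    try {
      val (afterMapValues, afterFlatMapValues) = partitionersAfter(sc)
      println(afterMapValues.isDefined)     // true
      println(afterFlatMapValues.isDefined) // true
    } finally sc.stop()
  }
}
```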
