What is a Perceptron?

In machine learning, the perceptron is an algorithm for supervised learning of binary classifiers. It is a type of linear classifier, i.e. a classification algorithm that makes its predictions based on a linear predictor function combining a set of weights with the feature vector.

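A linear classifier separates the categories with a linear decision boundary (a line in two dimensions, a hyperplane in general). For the perceptron to classify every training example correctly, the training data must be linearly separable, i.e. if we are classifying into 2 categories, the examples of each category must lie on opposite sides of such a boundary.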

A binary classifier is one that distinguishes between exactly 2 categories.

Hence, the basic perceptron algorithm is used for binary classification, and every training example should belong to one of these two categories. The perceptron is the basic unit of a neural network, modelled on a single neuron.

Origin :-

The perceptron algorithm was invented in 1957 at the Cornell Aeronautical Laboratory by Frank Rosenblatt, funded by the United States Office of Naval Research. It was intended to be a machine, rather than a program, and while its first implementation was in software for the IBM 704, it was subsequently implemented in custom-built hardware as the “Mark 1 perceptron”. This machine was designed for image recognition: it had an array of 400 photocells, randomly connected to the “neurons”. Weights were encoded in potentiometers, and weight updates during learning were performed by electric motors.

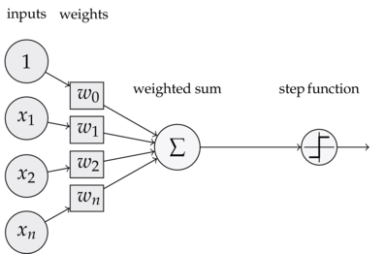

Components :- The following are the major components of a perceptron:

- Input:- All the features become the inputs to a perceptron. We denote the inputs by [x1, x2, x3, ..., xn], where each x is a feature value and n is the total number of features. There is also a special kind of input called the bias. In the image, the bias is shown as w0.

- Weights:- Weights are the values that are learned while training the model. We start the weights at some initial value, and they are updated whenever the model makes an error on a training example. We represent the weights of a perceptron by [w1, w2, w3, ..., wn].

- BIAS:- A bias neuron allows a classifier to shift the decision boundary left or right. In algebraic terms, the bias neuron allows a classifier to translate its decision boundary. To translate is to “move every point a constant distance in a specified direction”. Without a bias the decision boundary would always have to pass through the origin, so the bias lets the model fit data that such a boundary cannot separate.

- Weighted Summation:- The weighted summation is the sum of the values we get after multiplying each weight [wi] by its associated feature value [xi]. We represent the weighted summation by ∑ wi·xi for i = 1 to n.

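- Step/Activation Function:- In general, the role of activation functions is to make neural networks non-linear. In a perceptron, the step function simply thresholds the weighted sum, mapping it to one of the two categories, so the decision boundary stays linear, which is what linear classification requires.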

- Output:- The weighted summation (together with the bias) is passed to the step/activation function, and the value it returns is our predicted output.
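To make the components concrete, here is a minimal Python sketch of how the weights and bias might be updated on each training error. It is an illustration under assumptions, not a reference implementation: the function names (`step`, `predict`, `train`), the zero initial weights, the learning rate `lr`, the `>= 0` threshold, and the AND-gate toy data are all choices made for this example and do not come from the original post.

```python
def step(z):
    """Step activation (assumed convention): 1 if z >= 0, else 0."""
    return 1 if z >= 0 else 0


def predict(weights, bias, x):
    """Weighted summation of the inputs plus the bias, passed through the step."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return step(z)


def train(samples, labels, lr=1.0, epochs=20):
    """Perceptron learning rule: adjust weights and bias only when a
    training example is misclassified."""
    weights = [0.0] * len(samples[0])  # assumed initial values
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in zip(samples, labels):
            error = y - predict(weights, bias, x)  # -1, 0 or +1
            if error != 0:
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                errors += 1
        if errors == 0:  # every example classified correctly
            break
    return weights, bias


# Toy data (assumption): the logical AND of two binary features,
# which is linearly separable.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [0, 0, 0, 1]

weights, bias = train(X, y)
predictions = [predict(weights, bias, x) for x in X]
print(predictions)
```

Because the AND data is linearly separable, the loop stops once an entire pass produces no errors. On data that is not linearly separable it would simply run for the full number of epochs without settling.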

Inside The Perceptron:-

Description:-

- First, the features of an example are given as input to the perceptron.

- These input features are multiplied by their corresponding weights [which start at an initial value].

- The summation is computed over the values we get after multiplying each feature by its corresponding weight.

- The bias is then added to the value of the summation.

- Finally, the step/activation function is applied to the new value.
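The steps above can be sketched as a single function, one line per step. This is only an illustrative sketch: the name `forward`, the hand-picked weights and bias (chosen here so the perceptron behaves like a logical AND), and the `>= 0` threshold are assumptions made for the example.

```python
def forward(features, weights, bias):
    """One pass through a perceptron, following the steps in order."""
    # Multiply each input feature by its corresponding weight.
    products = [w * x for w, x in zip(weights, features)]
    # Sum the products (the weighted summation).
    weighted_sum = sum(products)
    # Add the bias to the summation.
    z = weighted_sum + bias
    # Apply the step function (assumed convention: 1 if z >= 0, else 0).
    return 1 if z >= 0 else 0


# Hand-picked values (assumption), not learned from data.
weights = [1.0, 1.0]
bias = -1.5

for features in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(features, forward(features, weights, bias))
```

With these values only the input (1, 1) gives a weighted sum large enough to overcome the negative bias, so it is the only input classified as 1.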

References:- Perceptron: The most basic form of Neural Network
