In this blog, we will create a very simple application with Play Framework and Spark.

Apache Spark is a fast, general-purpose cluster computing system. It provides high-level APIs in Scala, Java, Python and R that make parallel jobs easy to write, and an optimized engine that supports general computation graphs. It also supports a rich set of higher-level tools, including Spark SQL and Spark Streaming.

Play, on the other hand, has a lightweight, stateless, web-friendly architecture. It is built on Akka and provides predictable, minimal resource consumption (CPU, memory, threads) for highly scalable applications.

Now let's build an application using these technologies.

We will create a Spark application built on Play 2.5. The same approach can be adapted to other Play versions, but note that the `GlobalSettings` object used below is deprecated in Play 2.5 and removed in Play 2.6.

First, download the latest Activator version and create a new play-scala project:

    knoldus@knoldus:~/Desktop/activator/activator-1.3.12-minimal$ activator new
    Fetching the latest list of templates...
    Browse the list of templates: http://lightbend.com/activator/templates
    Choose from these featured templates or enter a template name:
      1) minimal-akka-java-seed
      2) minimal-akka-scala-seed
      3) minimal-java
      4) minimal-scala
      5) play-java
      6) play-scala
    (hit tab to see a list of all templates)
    > 6
    Enter a name for your application (just press enter for 'play-scala')
    > play-spark
    OK, application "play-spark" is being created using the "play-scala" template

Import your project into IntelliJ and add the following dependencies to your build.sbt file:

    libraryDependencies ++= Seq(
      jdbc,
      cache,
      ws,
      "org.scalatestplus.play" %% "scalatestplus-play" % "1.5.1" % Test,
      "com.fasterxml.jackson.core" % "jackson-core" % "2.8.7",
      "com.fasterxml.jackson.core" % "jackson-databind" % "2.8.7",
      "com.fasterxml.jackson.core" % "jackson-annotations" % "2.8.7",
      "com.fasterxml.jackson.module" %% "jackson-module-scala" % "2.8.7",
      "org.apache.spark" % "spark-core_2.11" % "2.1.1",
      "org.webjars" %% "webjars-play" % "2.5.0-1",
      "org.webjars" % "bootstrap" % "3.3.6",
      "org.apache.spark" % "spark-sql_2.11" % "2.1.1",
      "com.adrianhurt" %% "play-bootstrap" % "1.0-P25-B3"
    )

    // Drop the log4j SLF4J binding pulled in by Spark so it does not clash
    // with the Logback binding that Play already provides.
    libraryDependencies ~= { _.map(_.exclude("org.slf4j", "slf4j-log4j12")) }
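The Spark artifacts above hard-code the `_2.11` Scala suffix, which matches the Scala 2.11 that Play 2.5 uses. As an optional alternative (a sketch, not what this blog's project uses), sbt's `%%` operator appends the project's Scala binary version automatically, so the suffix stays in step with `scalaVersion`. Use these lines instead of the two explicit `spark-core_2.11` / `spark-sql_2.11` entries, not alongside them:

```scala
// In build.sbt: %% appends the Scala binary version (e.g. _2.11),
// so these resolve to spark-core_2.11 and spark-sql_2.11 on a Scala 2.11 project.
libraryDependencies ++= Seq(
  "org.apache.spark" %% "spark-core" % "2.1.1",
  "org.apache.spark" %% "spark-sql"  % "2.1.1"
)
```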

Add these routes to the routes file in the conf folder:

    # Routes
    # This file defines all application routes (Higher priority routes first)
    # ~~~~

    # Home page

    # Map static resources from the /public folder to the /assets URL path
    GET     /assets/*file        controllers.Assets.versioned(path="/public", file: Asset)
    GET     /webjars/*file       controllers.WebJarAssets.at(file)

    GET     /locations/json      controllers.ApplicationController.index
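Play compiles the routes file into a router, and, as the header comment says, routes listed first take priority. The toy model below is not Play's generated router; it is only an illustrative sketch (the `Route` and `ToyRouter` names are hypothetical) of first-match lookup on method and path, where a trailing `*file` segment matches the rest of the path:

```scala
// Toy model of a first-match route table. This is NOT Play's router implementation.
final case class Route(method: String, pattern: String, action: String)

object ToyRouter {
  // Routes are tried in declaration order; the first match wins.
  val routes: List[Route] = List(
    Route("GET", "/assets/*file", "controllers.Assets.versioned"),
    Route("GET", "/webjars/*file", "controllers.WebJarAssets.at"),
    Route("GET", "/locations/json", "controllers.ApplicationController.index")
  )

  // A "*name" segment at the end of a pattern matches the rest of the path.
  private def matches(pattern: String, path: String): Boolean = {
    val star = pattern.indexOf("*")
    if (star >= 0) path.startsWith(pattern.substring(0, star))
    else pattern == path
  }

  // Returns the action of the first route matching both method and path, if any.
  def resolve(method: String, path: String): Option[String] =
    routes.find(r => r.method == method && matches(r.pattern, path)).map(_.action)
}
```

For example, `ToyRouter.resolve("GET", "/locations/json")` returns `Some("controllers.ApplicationController.index")`, while a `POST` to the same path finds no route.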

Next, create a bootstrap package inside your app folder and add an object to it. This object is loaded when the application starts, and it initializes the SparkSession.

    package bootstrap

    import org.apache.spark.sql.SparkSession
    import play.api._

    object Init extends GlobalSettings {

      var sparkSession: SparkSession = _

      /**
       * On start, load the JSON data from conf/data.json into an in-memory
       * Spark temporary view named "godzilla".
       */
      override def onStart(app: Application): Unit = {
        sparkSession = SparkSession.builder
          .master("local")
          .appName("ApplicationController")
          .getOrCreate()

        val dataFrame = sparkSession.read.json("conf/data.json")
        dataFrame.createOrReplaceTempView("godzilla")
      }

      /**
       * On stop, shut down the SparkSession (guarding against a failed start).
       */
      override def onStop(app: Application): Unit = {
        if (sparkSession != null) sparkSession.stop()
      }

      def getSparkSessionInstance: SparkSession = sparkSession
    }
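Because `Init` loads conf/data.json with `sparkSession.read.json`, the file should use the JSON Lines layout that Spark 2.1 expects by default: one complete JSON object per line, rather than a single pretty-printed array. The sketch below writes such a file. The records are hypothetical placeholders, not the blog's actual data; only the `date` field is implied by the query used in the controller.

```scala
import java.nio.charset.StandardCharsets
import java.nio.file.{Files, Path}

object SampleData {
  // Hypothetical records: "id" is a placeholder field; "date" matches the blog's query.
  val records: Seq[String] = Seq(
    """{"id":1,"date":"2000-02-05"}""",
    """{"id":2,"date":"2000-02-05"}""",
    """{"id":3,"date":"2000-02-06"}"""
  )

  // JSON Lines: one self-contained JSON object per line, newline-terminated.
  def toJsonLines(rows: Seq[String]): String = rows.mkString("", "\n", "\n")

  // Writes the sample records to the given path (overwriting it) and returns the path.
  def write(path: Path): Path =
    Files.write(path, toJsonLines(records).getBytes(StandardCharsets.UTF_8))
}
```

Calling `SampleData.write(java.nio.file.Paths.get("conf/data.json"))` from the project root would produce a file that `read.json` can load as three rows.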

To register this object as the application's global settings object (rather than the default `Global` in the root package), add the following lines to your application.conf:

    # This is the main configuration file for the application.
    # ~~~~~

    # Secret key
    # ~~~~~
    # The secret key is used to secure cryptographics functions.
    # If you deploy your application to several instances be sure to use the same key!
    application.secret="AuAS5FW52/uX9Uy]TDBWwG6e@A=X2bFtv2q_I>6<t@Y[VtJtTGXQEXoU5BouE]rk"

    # The application languages
    # ~~~~~
    application.langs="en"

    # Global object class
    # ~~~~~
    # Define the Global object class for this application.
    # Default to Global in the root package.
    application.global= bootstrap.Init

Inside your controllers package, add a new controller named ApplicationController. It queries the JSON data that `Init` has already loaded into Spark, converts the result to JSON and returns it in the response.

    package controllers

    import javax.inject.Inject

    import org.apache.spark.sql.DataFrame
    import play.api.i18n.MessagesApi
    import play.api.libs.json.{JsArray, Json}
    import play.api.mvc.{Action, AnyContent, Controller}

    import bootstrap.Init

    class ApplicationController @Inject()(implicit webJarAssets: WebJarAssets,
                                          val messagesApi: MessagesApi) extends Controller {

      // The Spark query runs synchronously, so a plain (non-async) Action is used.
      def index: Action[AnyContent] = Action {
        // "godzilla" is the temporary view registered by bootstrap.Init on startup,
        // so conf/data.json does not need to be read again here.
        val query = "SELECT * FROM godzilla WHERE date = '2000-02-05' LIMIT 8"

        val sparkSession = Init.getSparkSessionInstance
        val result: DataFrame = sparkSession.sql(query)

        // toJSON yields one JSON object string per row; parse each one and
        // wrap them in a JSON array so the response body is valid JSON.
        val rows = result.toJSON.collect().map(row => Json.parse(row))
        Ok(JsArray(rows.toSeq))
      }
    }
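To make the controller's steps concrete, here is a model of them using plain Scala collections, with no Spark or Play involved. `Row`, `select`, `rowToJson` and `toJsonArray` are hypothetical names for illustration: `select` mirrors the WHERE/LIMIT query, and `toJsonArray` shows why the per-row strings produced by `toJSON` have to be joined with commas inside brackets. A bare `mkString` would produce `{...}{...}`, which is not valid JSON.

```scala
object QuerySketch {
  // Hypothetical row type; only the `date` column is implied by the blog's query.
  final case class Row(id: Int, date: String)

  // Collections analogue of: SELECT * FROM godzilla WHERE date = '2000-02-05' LIMIT 8
  def select(rows: Seq[Row], date: String, limit: Int): Seq[Row] =
    rows.filter(_.date == date).take(limit)

  // Like Dataset.toJSON, produce one JSON object string per row
  // (no escaping, which is fine for these simple values).
  def rowToJson(r: Row): String = s"""{"id":${r.id},"date":"${r.date}"}"""

  // Join per-row JSON objects into a single valid JSON array.
  def toJsonArray(jsonRows: Seq[String]): String = jsonRows.mkString("[", ",", "]")
}
```

One difference worth keeping in mind: `take` keeps the collection's order, whereas without an `ORDER BY` Spark does not promise which matching rows `LIMIT 8` returns.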

Now we are all set to run our Play application.

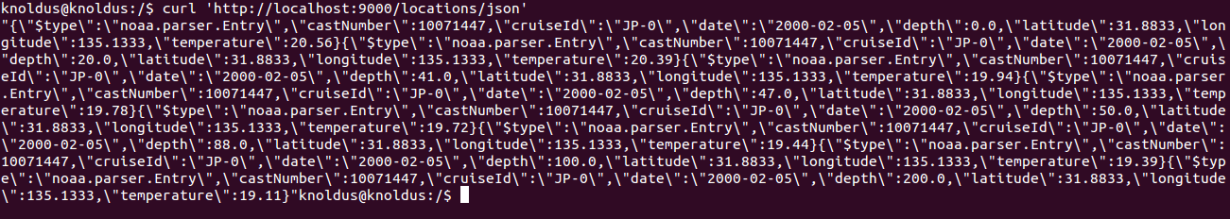

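Start the application with `activator run`. To query the data, send a GET request to the `/locations/json` route, for example with `curl http://localhost:9000/locations/json` (9000 is Play's default development port).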

A working demo is available here.

Happy coding! I hope this blog helps.