In this blog, we will be starting with Introduction to Apache Druid and will focus the discussion on the Druid SQL query model and will demonstrate various ways in which we can query Druid for SQL workloads.

What is Druid?

I would not mind quoting the Druid documentation for this purpose: “Druid is a data store designed for high-performance slice-and-dice analytics (“OLAP“-style) on large data sets. Druid is most often used as a data store for powering GUI analytical applications, or as a backend for highly-concurrent APIs that need fast aggregations.”

You might be wondering where is “SQL” in that? Actually, the fact is Druid is designed for special kind of SQL workloads which we can relate with powering the GUI analytical applications which require low latency query response. But in this post, we will only look in the “how part” of it using Druid to quickly run queries.

Where to use Druid?

What those use cases can be where we can leverage the power of Apache Druid? Below are a few use cases where Druid fits bests:

- Clickstream analytics

- Network flow analytics

- Server metrics storage

- BI/OLAP

As you can see in the first two use cases rely on the event-based queries. Apache Druid natively supports time series data, Druid is optimized for data where a timestamp is present. Druid partitions data by time, so the queries that include a time filter will be significantly faster than those that do not. Before we dive deep into the use cases and arguments that support how Druid fits best for the use cases, Let us go through the introductory steps which focus on “How” part of Druid SQL.

Setting up Query Data

Prior to query, let us look at the schema of data we will be using to query Druid. The data is Wikipedia Edits History which is basically part of the basic tutorial datasets and you can find it in the {DRUID_BINARY}/quickstart/tutorial directory. Below is an example record:

{

"timestamp":"2015-09-12T20:03:45.018Z",

"channel":"#en.wikipedia",

"namespace":"Main",

"page":"Spider-Man's powers and equipment",

"user":"foobar",

"comment":"/* Artificial web-shooters */",

"cityName":"New York",

"regionName":"New York",

"regionIsoCode":"NY",

"countryName":"United States",

"countryIsoCode":"US",

"isAnonymous":false,

"isNew":false,

"isMinor":false,

"isRobot":false,

"isUnpatrolled":false,

"added":99,

"delta":99,

"deleted":0,

}Prerequisites Before Ingesting Data

I assume you’ve already downloaded the Druid binary package if not, you can quickstart steps and execute the below commands to bring druid processes to life.

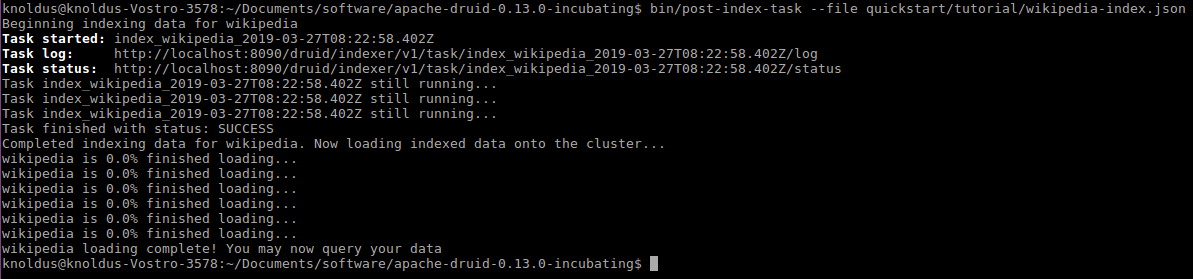

Once the Druid processes are running, execute the be below ingestion job mention on the docs to get the data available for querying.

Once the data is available for querying, we can start with the Druid client with the Queries.

How to Query Druid?

There are two ways we can query the Druid:

- Client APIs: JSON over HTTP (Native JSON queries)

Queries can be made using an HTTP REST style request to queryable nodes. Nodes can be either A Broker, Historical or A Peon. Please refer to architecture if you would like to know the details of these terms. Here is an example query defined at quickstart/tutorial/wikipedia-top-pages.json in druid bin package.

curl -X 'POST' -H 'Content-Type:application/json' -d @quickstart/tutorial/wikipedia-top-pages.json http://localhost:8082/druid/v2?pretty

Results:

[ {

"timestamp" : "2015-09-12T00:46:58.771Z",

"result" : [ {

"count" : 33,

"page" : "Wikipedia:Vandalismusmeldung"

}, {

"count" : 28,

"page" : "User:Cyde/List of candidates for speedy deletion/Subpage"

}, {

"count" : 27,

"page" : "Jeremy Corbyn"

}, {

"count" : 21,

"page" : "Wikipedia:Administrators' noticeboard/Incidents"

}, {

"count" : 20,

"page" : "Flavia Pennetta"

}, {

"count" : 18,

"page" : "Total Drama Presents: The Ridonculous Race"

}, {

"count" : 18,

"page" : "User talk:Dudeperson176123"

}, {

"count" : 18,

"page" : "Wikipédia:Le Bistro/12 septembre 2015"

}, {

"count" : 17,

"page" : "Wikipedia:In the news/Candidates"

}, {

"count" : 17,

"page" : "Wikipedia:Requests for page protection"

} ]

} ]The above query is an example of a Native JSON query supported by Druid which will fetch 10 records of most edited Wiki Pages. The attribute “queryType” is topN which returns a sorted set of records based on some criteria.

2. dsql client

A client can also be used to run queries. The client is provided by Druid as bin/dslq inside Druid binary.

knoldus@knoldus-Vostro-3578:~/Documents/software/apache-druid-0.13.0-incubating$ ./bin/dsql

Welcome to dsql, the command-line client for Druid SQL.

Type "\h" for help.

dsql> SELECT page, COUNT(*) AS Edits FROM wikipedia WHERE "__time" BETWEEN TIMESTAMP '2015-09-12 00:00:00' AND TIMESTAMP '2015-09-13 00:00:00' GROUP BY page ORDER BY Edits DESC LIMIT 10;

┌──────────────────────────────────────────────────────────┬───────┐

│ page │ Edits │

├──────────────────────────────────────────────────────────┼───────┤

│ Wikipedia:Vandalismusmeldung │ 33 │

│ User:Cyde/List of candidates for speedy deletion/Subpage │ 28 │

│ Jeremy Corbyn │ 27 │

│ Wikipedia:Administrators' noticeboard/Incidents │ 21 │

│ Flavia Pennetta │ 20 │

│ Total Drama Presents: The Ridonculous Race │ 18 │

│ User talk:Dudeperson176123 │ 18 │

│ Wikipédia:Le Bistro/12 septembre 2015 │ 18 │

│ Wikipedia:In the news/Candidates │ 17 │

│ Wikipedia:Requests for page protection │ 17 │

└──────────────────────────────────────────────────────────┴───────┘

Retrieved 10 rows in 0.20s.3. Using JDBC Driver to Query Druid

If you would like to use a JDBC driver for the same, it can be done by using Avatica JDBC driver. You will have to download the jar of the driver and include it in the classpath. To view the details on the example, you can look into the “JDBC” section of Running SQL Queries.

In this post, we basically covered the ways we can query Druid for SQL. In the next part, We will focus our discussion on which kind of queries are supported in Druid and workload optimization. Thanks for Reading!

2 thoughts on “Running SQL on Apache Druid – Part I: How to run queries4 min read”

Comments are closed.