In this blog, I am going to cover what was left over from my last blog, “A Beginners Approach To KAFKA”, in which I explained the details of Kafka, such as its terminology and advantages, and demonstrated how to set up the Kafka environment, bring up our Single-Broker Cluster, and test that it works.

The main question I am going to cover here is: how can we set up a Multi-Broker Cluster? I will build on my previous blog and the Single-Broker Cluster that I built there, upgrading that same cluster to a Multi-Broker Cluster, so please refer to that post for the concepts and the setup structure.

So let’s get started.

Setting Up A Multi-Broker Cluster:

For Kafka, a single broker is nothing but a cluster of size 1, so let’s expand our cluster to 3 nodes for now. To expand the cluster I need the single-broker cluster and its config/server.properties file (already set up in the previous blog). We can get it from there.

The server.properties file can be found in the config folder inside the Kafka directory.

kafka_2.11-0.10.2.0/config/server.properties

This file configures the first node, the one we created for our single-broker cluster. For the other two nodes, let’s start by copying server.properties to server-1.properties and server-2.properties in the same config folder. This can be done by issuing the following commands from the Kafka directory in a terminal:

cp config/server.properties config/server-1.properties

cp config/server.properties config/server-2.properties

If you open the server.properties file you’ll find that it contains:

1. A broker Id field.

# The id of the broker. This must be set to a unique integer for each broker.

broker.id=0

2. A Listeners field

# FORMAT:

# listeners = listener_name://host_name:port

# EXAMPLE:

# listeners = PLAINTEXT://your.host.name:9092

3. A log.dirs field

############################# Log Basics #############################

# A comma seperated list of directories under which to store log files

log.dirs=/tmp/kafka-logs

The same fields are present in our server-1.properties and server-2.properties files, since these are exact copies of server.properties. All three brokers run on the same machine, so each one needs its own id, port, and log directory. For our Multi-Broker system to work, we need to change these fields in server-1.properties and server-2.properties.

Edit the server-1.properties file and set the above-mentioned fields to the following values:

broker.id=1

listeners=PLAINTEXT://:9093

log.dirs=/tmp/kafka-logs-1

Edit the server-2.properties file and set the above-mentioned fields to the following values:

broker.id=2

listeners=PLAINTEXT://:9094

log.dirs=/tmp/kafka-logs-2
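If you would rather not edit the copies by hand, the same three changes can be scripted. The following is only a sketch: make_broker_config is a hypothetical helper name, and it assumes the base file contains a broker.id line, a log.dirs line, and a listeners line that may still be commented out with a leading #.

```shell
# Hypothetical helper: print a copy of a base server.properties with the
# broker id, listener port, and log directory changed for a new broker.
# Usage: make_broker_config <base-file> <broker-id> <port>
make_broker_config() {
  base="$1"; id="$2"; port="$3"
  sed -e "s|^broker\.id=.*|broker.id=${id}|" \
      -e "s|^#*listeners=.*|listeners=PLAINTEXT://:${port}|" \
      -e "s|^log\.dirs=.*|log.dirs=/tmp/kafka-logs-${id}|" \
      "$base"
}

# Example (run from the Kafka directory):
# make_broker_config config/server.properties 1 9093 > config/server-1.properties
# make_broker_config config/server.properties 2 9094 > config/server-2.properties
```

The helper writes to standard output, so the base file is never modified and the result can be inspected before it is saved.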

Once the new brokers’ properties are updated, we can start them.
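Before starting anything, it may be worth confirming that no two files share a broker id, listener, or log directory, since a clash there is the most likely reason for a broker on the same machine failing to come up. Here is a minimal sketch of such a check; check_unique is a hypothetical helper name, and it only looks at lines that actually set a key, so a commented-out listeners line (which falls back to the default port) is ignored.

```shell
# Hypothetical pre-flight check: report any broker.id, listeners, or log.dirs
# value that appears in more than one of the given properties files.
check_unique() {
  status=0
  for key in broker.id listeners log.dirs; do
    dups=$(
      for f in "$@"; do
        # Print everything after "key=" for lines that set this key.
        awk -F= -v k="$key" '$1 == k { print substr($0, length(k) + 2) }' "$f"
      done | sort | uniq -d
    )
    if [ -n "$dups" ]; then
      echo "duplicate $key: $dups"
      status=1
    fi
  done
  return "$status"
}

# Example (run from the Kafka directory):
# check_unique config/server.properties config/server-1.properties config/server-2.properties
```

The function prints nothing and returns 0 when every value is unique, so it can be used directly in an if statement.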

Before starting the two new brokers, check that ZooKeeper is up and start the broker we built for the single-broker system.

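To start the first server, type the following in its own terminal, since without a trailing & the command keeps running in the foreground: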

bin/kafka-server-start.sh config/server.properties

To start the second server type:

bin/kafka-server-start.sh config/server-1.properties &

To start the third server type:

bin/kafka-server-start.sh config/server-2.properties &

After running the above commands, all 3 nodes should be up. Next, we need to create a topic with a replication factor of 3. This can be done with:

bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 3 --partitions 1 --topic MultiBrokerTopic

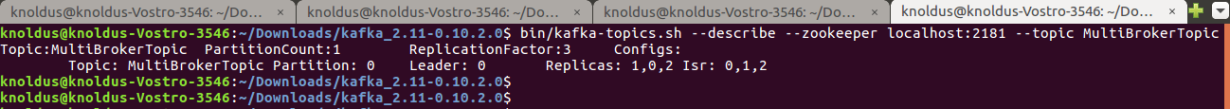

Now that we have set up our cluster, we still don’t know which broker is doing what. To find out, we can run the topics command with the “describe” option:

bin/kafka-topics.sh --describe --zookeeper localhost:2181 --topic MultiBrokerTopic

We will see output like the lines shown below, which breaks down as follows:

1) The first line gives a summary of all the partitions.

Topic:MultiBrokerTopic PartitionCount:1 ReplicationFactor:3 Configs:

2) Each additional line gives information about one partition. Since we have only one partition for this topic, there is only one line.

Topic: MultiBrokerTopic Partition: 0 Leader: 0 Replicas: 1,0,2 Isr: 0,1,2

Leader is the node responsible for all reads and writes for the given partition. Each node will be the leader for a randomly selected portion of the partitions. Since we have just one partition, node 0 (here) is the leader for that single partition.

Replicas is the list of nodes that replicate the log for this partition regardless of whether they are the leader or even if they are currently alive.

Isr is the set of “in-sync” replicas. This is the subset of the replicas list that is currently alive and caught-up to the leader.
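To pick these fields out of the describe output in a script, something like the following could work. It is a small sketch: describe_field is a hypothetical helper name, and the sample line is the partition line shown above.

```shell
# Hypothetical helper: print the value that follows "<name>:" in one line of
# `kafka-topics.sh --describe` output. Works with space or tab separators.
describe_field() {
  printf '%s\n' "$2" |
    sed -n "s/.*$1:[[:space:]]*\([^[:space:]]*\).*/\1/p"
}

line='Topic: MultiBrokerTopic Partition: 0 Leader: 0 Replicas: 1,0,2 Isr: 0,1,2'

describe_field Leader "$line"     # prints 0
describe_field Replicas "$line"   # prints 1,0,2
describe_field Isr "$line"        # prints 0,1,2
```

Keeping the field name as a parameter means the same helper can read the leader, the replica list, and the in-sync list without three separate patterns.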

Publishing Messages to our new MultiBrokerTopic:

Let’s start our producer:

bin/kafka-console-producer.sh --broker-list localhost:9092 --topic MultiBrokerTopic

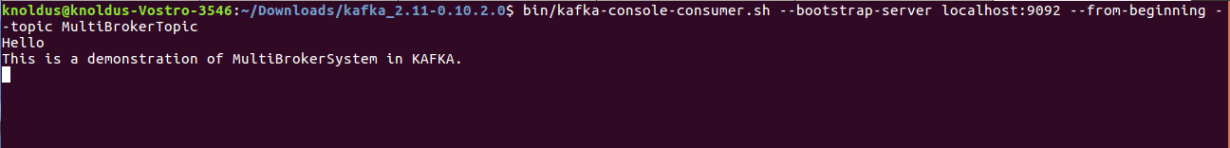

And then our consumer:

bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --from-beginning --topic MultiBrokerTopic
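Both commands above point only at localhost:9092. Clients use that address just for the initial connection, but a client started while that one broker is down has nothing to connect to, so it may be worth passing all three brokers as a comma-separated list. A small sketch, where bootstrap_list is a hypothetical helper name and the ports are the ones configured earlier:

```shell
# Hypothetical helper: turn a list of ports into a comma-separated list of
# localhost addresses, suitable for --broker-list or --bootstrap-server.
bootstrap_list() {
  list=""
  for port in "$@"; do
    list="${list:+$list,}localhost:$port"
  done
  printf '%s\n' "$list"
}

bootstrap_list 9092 9093 9094   # prints localhost:9092,localhost:9093,localhost:9094

# Example (run from the Kafka directory, with the cluster up):
# bin/kafka-console-producer.sh --broker-list "$(bootstrap_list 9092 9093 9094)" --topic MultiBrokerTopic
# bin/kafka-console-consumer.sh --bootstrap-server "$(bootstrap_list 9092 9093 9094)" --from-beginning --topic MultiBrokerTopic
```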

Now let’s play with our system a bit. We have seen that broker0 is acting as the leader in our system, so what if it goes down or fails? Let’s try it out and see what happens next: does the system halt, or is there a fault-tolerance scheme that the system applies?

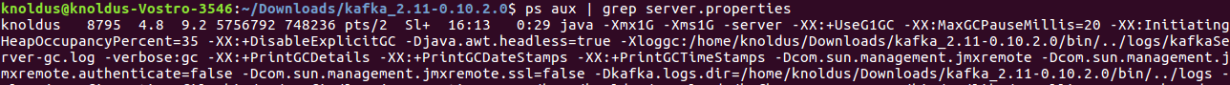

Killing the Leader (broker0 here):

ps aux | grep server.properties

We can see that broker0 has process ID 8795. To kill it, just type:

kill -9 8795
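The process ID will be different on every machine, so rather than copying 8795, a small helper can pull it out of the ps output. This is only a sketch: broker_pid is a hypothetical helper name, and it assumes the broker was started as shown earlier, so that its java command line contains kafka.Kafka and ends with the config file path.

```shell
# Hypothetical helper: read `ps aux` style output on stdin and print the PID
# (second column) of the Kafka broker whose command line ends with the given
# config file path.
broker_pid() {
  awk -v cfg="$1" 'index($0, "kafka.Kafka") && $NF == cfg { print $2 }'
}

# Example (assumption: broker 0 was started with config/server.properties;
# the ww option asks ps not to truncate long command lines):
# pid=$(ps auxww | broker_pid config/server.properties)
# kill -9 "$pid"
```

Matching on the last argument means server-1.properties and server-2.properties are never picked up by mistake, and the helper does not match its own awk process.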

Now let’s check the details of the nodes again:

bin/kafka-topics.sh --describe --zookeeper localhost:2181 --topic MultiBrokerTopic

The output should now show a different leader. When we killed our leader (broker0 here), leadership moved to broker1 (it could have been broker2 too), and node 0 is no longer in the Isr list. That’s the kind of fault tolerance we get when running a Multi-Broker Cluster: when a leader fails, one of the other in-sync nodes takes its place and the system keeps working normally. All the messages are still available to be consumed even though the leader that originally took the writes (broker0 here) went down. That’s the beauty of a Kafka Multi-Broker system.
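One way to spot a failed broker from the describe output is to compare the Replicas list with the Isr list: any id that is in the first but not in the second is a replica that is down or lagging. A minimal sketch follows; missing_from_isr is a hypothetical helper name, and the sample values are illustrative, based on the description above rather than copied from real output.

```shell
# Hypothetical helper: given the Replicas and Isr lists from the describe
# output (comma-separated broker ids), print every replica not in the Isr.
missing_from_isr() {
  replicas="$1"; isr="$2"
  for r in $(printf '%s' "$replicas" | tr ',' ' '); do
    case ",$isr," in
      *",$r,"*) ;;                  # replica is in sync
      *) printf '%s\n' "$r" ;;      # replica is missing from the Isr
    esac
  done
}

# Illustrative values for the state after broker 0 was killed:
missing_from_isr "1,0,2" "1,2"   # prints 0
```

Wrapping the Isr list in commas before matching avoids treating broker 1 as present just because broker 10 or 11 is.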

So that’s all for the Multi-Broker Cluster. In my next blog, I’ll probably be discussing programming techniques in Kafka, and we’ll see a simple Scala consumer and producer example.

Till then Happy Reading 🙂 🙂

