The demand for stream processing is increasing rapidly these days, because processing large volumes of data is often not enough.

Data has to be processed fast, so that a firm can react to changing business conditions in real time.

Stream processing is the continuous, concurrent processing of data in real time.

“Stream processing” is ideal for processing data streams or sensor data (usually with a high ratio of event throughput to number of queries), whereas “complex event processing” (CEP) uses event-by-event processing and aggregation (e.g. on potentially out-of-order events from a variety of sources, often with large numbers of rules or business logic).

There are many options for real-time data processing, e.g. Spark, Kafka Streams, Flink and Storm.

In this blog, I am going to discuss the differences between Apache Spark (Spark Streaming) and Kafka Streams.

Apache Spark:

Apache Spark is a general framework for large-scale data processing that supports many programming languages and concepts such as MapReduce, in-memory processing, stream processing, graph processing and machine learning. It can also run on top of Hadoop. With Spark Streaming, data can be ingested from many sources like Kafka, Flume, Kinesis or TCP sockets, and can be processed using complex algorithms expressed with high-level functions like map, reduce, join and window.

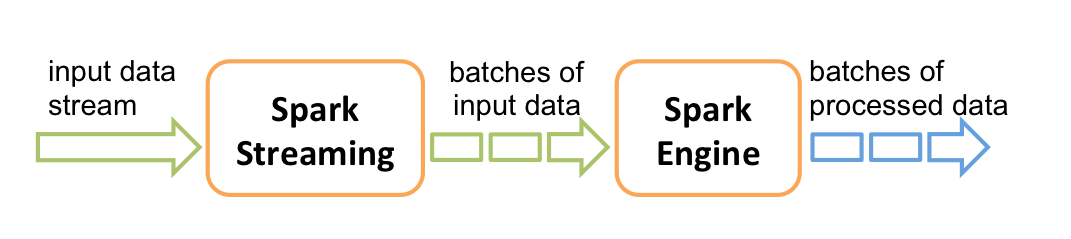

Internally, it works as follows. Spark Streaming receives live input data streams and divides the data into batches, which are then processed by the Spark engine to generate the final stream of results in batches.
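To make the micro-batch model concrete, here is a toy Python sketch that does not use Spark at all: it groups incoming events by arrival time into fixed intervals and then processes each group as a unit. The function names `micro_batches` and `process_batch`, the summing step and the one-second interval are hypothetical choices for illustration only.

```python
from collections import defaultdict


def micro_batches(events, batch_interval):
    """Group (arrival_time, value) events into consecutive batches.

    Each batch covers [k * batch_interval, (k + 1) * batch_interval)
    of arrival (processing) time, loosely mirroring how Spark Streaming
    cuts a live stream into small batches. Empty intervals are skipped.
    """
    batches = defaultdict(list)
    for arrival_time, value in events:
        batches[int(arrival_time // batch_interval)].append(value)
    # Return batches in time order, as the engine would process them.
    return [batches[k] for k in sorted(batches)]


def process_batch(batch):
    # Stand-in for the work the Spark engine would do on one batch:
    # here we simply sum the values.
    return sum(batch)


events = [(0.1, 1), (0.4, 2), (1.2, 3), (1.9, 4), (3.5, 5)]
results = [process_batch(b) for b in micro_batches(events, batch_interval=1.0)]
print(results)  # [3, 7, 5]
```

Note that results only appear once a whole interval has been collected, which is why micro-batching trades some latency for throughput.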

Spark Streaming provides a high-level abstraction called discretized stream or DStream, which represents a continuous stream of data. DStreams can be created either from input data streams from sources such as Kafka, Flume, and Kinesis, or by applying high-level operations on other DStreams. Internally, a DStream is represented as a sequence of RDDs.
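The DStream idea can be mimicked with a small, self-contained Python class. `ToyDStream` is a hypothetical stand-in, not Spark's API: each batch is a plain list instead of an RDD, and every transformation is applied batch by batch and returns a new `ToyDStream`, the way an operation on one DStream yields another DStream.

```python
import functools


class ToyDStream:
    """Hypothetical stand-in for a DStream: an ordered list of batches.

    In Spark each batch would be an RDD; here each batch is a plain list.
    Each transformation returns a new ToyDStream and leaves this one untouched.
    """

    def __init__(self, batches):
        self.batches = [list(b) for b in batches]

    def map(self, fn):
        return ToyDStream([[fn(x) for x in batch] for batch in self.batches])

    def filter(self, pred):
        return ToyDStream([[x for x in batch if pred(x)] for batch in self.batches])

    def reduce(self, fn):
        # One result per non-empty batch; empty batches stay empty.
        return ToyDStream(
            [[functools.reduce(fn, batch)] if batch else [] for batch in self.batches]
        )


lines = ToyDStream([["a", "bb"], ["ccc"], []])
lengths = lines.map(len).filter(lambda n: n > 1).reduce(lambda a, b: a + b)
print(lengths.batches)  # [[2], [3], []]
```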

Kafka Streams:

Kafka Streams is a client library for processing and analyzing data stored in Kafka, and either writing the resulting data back to Kafka or sending the final output to an external system. It builds upon important stream processing concepts such as properly distinguishing between event time and processing time, windowing support, and simple yet efficient management of application state. It is based on many concepts already contained in Kafka, such as scaling by partitioning topics. For this reason, it also comes as a lightweight library that can be integrated into an application. The application can then be operated as desired: standalone, in an application server, as a Docker container, or via a resource manager such as Mesos.

Kafka Streams directly addresses a lot of the difficult problems in stream processing:

- Event-at-a-time processing (not microbatch) with millisecond latency.

- Stateful processing including distributed joins and aggregations.

- A convenient DSL.

- Windowing with out-of-order data using a DataFlow-like model.

- Distributed processing and fault-tolerance with fast failover.

- No-downtime rolling deployments.
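The windowing point is the least obvious one in this list. The following plain-Python sketch (not the Kafka Streams API) processes records one at a time, assigns each to a tumbling window by its event time, and still accepts late, out-of-order records until a grace period has passed. The function name, the `grace` parameter and the exact drop rule are simplifying assumptions loosely modeled on the grace-period idea in Kafka Streams.

```python
def tumbling_window_counts(events, window_size, grace):
    """Count events per (event-time window, key), one event at a time.

    events: (event_time, key) pairs in *arrival* order, which may differ
    from event-time order. A window [start, start + window_size) keeps
    accepting late events until the highest event time seen so far
    (the "stream time") reaches its end plus `grace`; after that, late
    events for the window are dropped.
    """
    counts = {}  # (window_start, key) -> count
    dropped = []
    stream_time = float("-inf")
    for event_time, key in events:
        stream_time = max(stream_time, event_time)
        window_start = (event_time // window_size) * window_size
        if stream_time >= window_start + window_size + grace:
            dropped.append((event_time, key))  # too late for its window
            continue
        counts[(window_start, key)] = counts.get((window_start, key), 0) + 1
    return counts, dropped


# The event at t=3 arrives after t=12 but is still within the grace period;
# the final event at t=1 arrives after t=25 and is too late.
events = [(1, "a"), (12, "a"), (3, "a"), (25, "a"), (1, "a")]
counts, dropped = tumbling_window_counts(events, window_size=10, grace=5)
print(counts)   # {(0, 'a'): 2, (10, 'a'): 1, (20, 'a'): 1}
print(dropped)  # [(1, 'a')]
```

Because windows are keyed by event time rather than arrival time, the late record at t=3 still lands in the window it belongs to.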

Apache Spark can be used with Kafka to stream data, but if you deploy a Spark cluster solely for one new application, that is a significant increase in complexity.

To avoid this complexity while still getting full-fledged stream processing, Kafka Streams comes into the picture, with the following goal.

The goal is to simplify stream processing enough to make it accessible as a mainstream application programming model for asynchronous services. It is the first library I know of that FULLY utilises Kafka as more than a message broker.

Kafka Streams is built on the concepts of KStreams and KTables, which help it provide event-time processing. Its other design goals include:

- Providing a processing model that is fully integrated with the core abstractions Kafka provides, to reduce the total number of moving pieces in a stream architecture.

- Fully integrating the idea of tables of state with streams of events, and making both available in a single conceptual framework.

- Making Kafka Streams a fully embedded library with no stream processing cluster, just Kafka and your application.
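The KStream/KTable pairing, and the goal of unifying tables of state with streams of events, rest on what is often called stream-table duality. As a plain-Python illustration (not the Kafka Streams `KStream`/`KTable` API), the same records can be read as a stream of independent events or folded into a table that keeps only the latest value per key; conversely, changes to a table can be emitted as a stream. Treating a `None` value as a delete mirrors Kafka's tombstone convention; the function names and data are hypothetical.

```python
def stream_to_table(changelog):
    """Fold a stream of (key, value) records into a table (dict).

    Read as a stream, each record is an independent event. Read as a
    table, each record is an upsert: only the latest value per key is
    kept, and a value of None deletes the key (a tombstone).
    """
    table = {}
    for key, value in changelog:
        if value is None:
            table.pop(key, None)
        else:
            table[key] = value
    return table


def table_to_stream(old, new):
    """Describe the change between two table snapshots as a stream of updates."""
    updates = [(k, v) for k, v in new.items() if old.get(k) != v]
    # Keys that disappeared are emitted as tombstones.
    updates += [(k, None) for k in old if k not in new]
    return updates


events = [("alice", "Paris"), ("bob", "Oslo"), ("alice", "Rome"), ("bob", None)]
print(stream_to_table(events))  # {'alice': 'Rome'}
print(table_to_stream({"alice": "Paris", "bob": "Oslo"}, {"alice": "Rome"}))
# [('alice', 'Rome'), ('bob', None)]
```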

It also rebalances the processing load as new instances of your application are added or existing ones crash, and it maintains local state for tables, which helps it recover from failures.
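The rebalancing behaviour can be pictured with a deliberately simplified round-robin assignment in plain Python. This is not Kafka's actual assignment algorithm (the real logic is more involved); the function name and instance names are hypothetical. It only shows the core idea that whenever the set of running instances changes, partitions are redistributed so each one is owned by exactly one live instance.

```python
def assign_partitions(num_partitions, instances):
    """Spread partitions over running instances round-robin.

    A simplified stand-in for group rebalancing: the assignment is
    recomputed from scratch whenever the set of instances changes.
    """
    if not instances:
        return {}
    ordered = sorted(instances)
    assignment = {inst: [] for inst in ordered}
    for p in range(num_partitions):
        assignment[ordered[p % len(ordered)]].append(p)
    return assignment


print(assign_partitions(6, ["app-1", "app-2"]))
# {'app-1': [0, 2, 4], 'app-2': [1, 3, 5]}
print(assign_partitions(6, ["app-1", "app-2", "app-3"]))  # an instance is added
# {'app-1': [0, 3], 'app-2': [1, 4], 'app-3': [2, 5]}
print(assign_partitions(6, ["app-1", "app-3"]))  # app-2 crashed
# {'app-1': [0, 2, 4], 'app-3': [1, 3, 5]}
```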

So, what to use?

Kafka Streams also offers low latency and easy-to-use event-time support. It is a rather focused library, and it’s very well suited for certain types of tasks; that’s also why so much of its design can be optimized for how Kafka works. You don’t need to set up any kind of special Kafka Streams cluster, and there is no cluster manager. If you need to do a simple Kafka topic-to-topic transformation, count elements by key, enrich a stream with data from another topic, run an aggregation, or do only real-time processing, Kafka Streams is for you.
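The typical Kafka Streams jobs listed above are small. Without a broker, a topic can be modeled as a list of (key, value) records. This plain-Python sketch (not the Kafka Streams DSL) re-keys hypothetical page-view records by URL, a topic-to-topic transformation, and then keeps a running count per key, emitting an updated count after every record. Emitting on every record is a simplification; Kafka Streams may buffer and coalesce updates before forwarding them.

```python
from collections import Counter


def transform_topic(records, fn):
    """Apply fn to each (key, value) record, like a topic-to-topic mapping."""
    return [fn(key, value) for key, value in records]


def count_by_key(records):
    """Running count per key, emitting (key, new_count) after every record."""
    counts = Counter()
    updates = []
    for key, _ in records:
        counts[key] += 1
        updates.append((key, counts[key]))
    return updates


# Hypothetical "page-views" input: (user, url) pairs.
page_views = [("alice", "/home"), ("bob", "/cart"), ("alice", "/cart")]
by_url = transform_topic(page_views, lambda user, url: (url, user))
print(count_by_key(by_url))
# [('/home', 1), ('/cart', 1), ('/cart', 2)]
```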

If event time is not relevant and latencies in the range of seconds are acceptable, Spark is the first choice. It is stable, and almost any type of system can be integrated with it easily. In addition, it comes with every Hadoop distribution. Furthermore, code written for batch applications can also be reused for streaming applications, since the APIs are largely the same.

Conclusion:

I believe that Kafka Streams is still best used in a ‘Kafka -> Kafka’ context, while Spark Streaming could be used for a ‘Kafka -> Database’ or ‘Kafka -> Data science model’ type of context.

I hope this blog is helpful for you. 🙂

References:

- https://kafka.apache.org/documentation/streams

- https://spark.apache.org/docs/latest/streaming-programming-guide.html
