In this blog we are going to understand what Apache Airflow is, how an Airflow workflow looks, what Airflow is used for, and how we can install it.

So let’s get started.

What is Apache Airflow?

Apache Airflow is an open-source platform that allows you to define, schedule, execute, and monitor workflows. A workflow is any series of steps you take to accomplish a given goal. Because workflows are defined as code and run by Airflow’s scheduler, a single Airflow installation can run thousands of different tasks each day, which makes workflow management much more efficient.

Uses of Airflow

We can use Apache Airflow to coordinate workflows made up of individual functions. Airflow acts as the orchestration engine, and each step in the workflow performs one specific task as its own standalone unit.

Apache Airflow can be used to run many different types of workflows, from managing a pipeline of web-crawling and data-processing tasks to orchestrating the deployment of software packages or updates across many servers. It gives you a clear, code-based way to define how your processes should run, so they complete more reliably and with less manual effort.

What kinds of problems can we solve using Apache Airflow?

- With plain command-line utilities (for example, cron jobs), creating and maintaining relationships between tasks is a nightmare, whereas in Airflow it is as simple as writing Python code.

- Command-line utilities need external support to log, track, and manage tasks. Airflow provides a UI to track and monitor workflow execution.

- Command-line utility jobs are not reproducible unless configured externally. Airflow keeps an audit trail of every task it executes.

- Airflow is scalable: with a distributed executor, tasks can run across many worker machines.
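To see why declaring task relationships in code helps, here is a minimal pure-Python sketch (it is not Airflow’s API): each hypothetical task lists the tasks it depends on, and Kahn’s topological-sort algorithm derives a run order that respects every dependency and rejects cycles. Airflow does this kind of bookkeeping for you once tasks are linked in a DAG file.

```python
from collections import deque
from typing import Dict, List


def run_order(upstream: Dict[str, List[str]]) -> List[str]:
    """Return task ids ordered so that every task comes after its dependencies.

    `upstream` maps each (hypothetical) task id to the ids it depends on.
    Raises ValueError if the dependencies contain a cycle.
    """
    # Count unmet dependencies per task and record the reverse (downstream) edges.
    remaining = {task: len(deps) for task, deps in upstream.items()}
    downstream: Dict[str, List[str]] = {task: [] for task in upstream}
    for task, deps in upstream.items():
        for dep in deps:
            if dep not in upstream:
                raise KeyError(f"{task} depends on unknown task {dep}")
            downstream[dep].append(task)

    # Kahn's algorithm: repeatedly take a task whose dependencies are all done.
    ready = deque(task for task, count in remaining.items() if count == 0)
    order: List[str] = []
    while ready:
        task = ready.popleft()
        order.append(task)
        for child in downstream[task]:
            remaining[child] -= 1
            if remaining[child] == 0:
                ready.append(child)

    # Tasks left over are stuck waiting on each other.
    if len(order) != len(upstream):
        raise ValueError("dependency cycle detected")
    return order


if __name__ == "__main__":
    pipeline = {
        "extract": [],
        "transform": ["extract"],
        "validate": ["extract"],
        "load": ["transform", "validate"],
    }
    print(run_order(pipeline))
```

Because the order is computed rather than maintained by hand, adding a task only means adding one entry together with its dependencies.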

What are the best features of Apache Airflow?

- Handles upstream/downstream dependencies gracefully (for example, when an upstream table is missing).

- Makes it easy to reprocess historical jobs by date, or to re-run them for specific intervals.

- Lets jobs pass parameters to downstream jobs.

- Monitors the status of all jobs in real time, with email alerts.

- Integrates with a lot of infrastructure (Hive, Presto, Druid, AWS, Google Cloud, etc.).

- Handles errors and failures gracefully, automatically retrying a task when it fails.

- Provides sensors that trigger a DAG (Airflow’s name for a workflow) when data arrives.

- Supports trigger rules that decide when a task runs, based on the states of its upstream tasks.

- Is open source: you can download Airflow, start using it right away, and work with peers in the community.
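To make the trigger-rule idea concrete, here is a simplified sketch in plain Python that decides whether a task should run once all of its upstream tasks have finished. The rule names (all_success, all_failed, all_done, one_success, one_failed, none_failed) and the state names are real Airflow names, but the function is only an illustration that assumes every upstream task has already reached a final state; the real scheduler also evaluates rules while upstream tasks are still running and supports more rules.

```python
from typing import Iterable

# Final states an upstream task can end in (a simplified subset of Airflow's).
TERMINAL_STATES = {"success", "failed", "skipped", "upstream_failed"}
FAILED_STATES = {"failed", "upstream_failed"}


def should_run(trigger_rule: str, upstream_states: Iterable[str]) -> bool:
    """Decide whether a task runs, given the final states of its upstream tasks.

    Illustrative model only, not Airflow's implementation.
    """
    states = list(upstream_states)
    unknown = set(states) - TERMINAL_STATES
    if unknown:
        raise ValueError(f"not a final state: {sorted(unknown)}")

    if trigger_rule == "all_success":  # Airflow's default rule
        return all(s == "success" for s in states)
    if trigger_rule == "all_failed":
        return all(s in FAILED_STATES for s in states)
    if trigger_rule == "all_done":  # run no matter how upstream tasks ended
        return True
    if trigger_rule == "one_success":
        return any(s == "success" for s in states)
    if trigger_rule == "one_failed":
        return any(s in FAILED_STATES for s in states)
    if trigger_rule == "none_failed":  # success or skipped are both acceptable
        return not any(s in FAILED_STATES for s in states)
    raise ValueError(f"unsupported trigger rule: {trigger_rule}")
```

For example, should_run("all_done", ["success", "failed"]) returns True, which is why all_done suits cleanup tasks that must run even when something upstream failed; under the default all_success such a task would not run.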

Workflows in Apache Airflow

A workflow is a sequence of tasks that is started on a schedule or triggered by an event. Workflows are frequently used to handle big data processing pipelines.

Here is an example of a typical workflow:

- First, download data from the source.

- Then send the data somewhere to be processed.

- While it is being processed, monitor how far the processing has progressed.

- After processing is complete, generate a report.

- Finally, send the report by email.
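The five steps above can be sketched as plain Python functions chained together, each receiving the previous step’s output. Everything here is hypothetical: the data is a placeholder list, monitoring is a progress printout inside the processing step, and the email step only builds the message text instead of contacting a mail server. The small runner mimics how an orchestrator records a state for every step and stops downstream steps when one fails.

```python
from typing import Any, Callable, List, Tuple

# Hypothetical stand-ins for the workflow steps.


def download_data(_: Any) -> List[int]:
    # Placeholder records instead of a real download from a source system.
    return [3, 1, 4, 1, 5]


def process_data(records: List[int]) -> List[int]:
    processed = []
    for done, value in enumerate(records, start=1):
        processed.append(value * 10)
        # Monitoring: report how far the processing has progressed.
        print(f"processed {done}/{len(records)} records")
    return processed


def build_report(processed: List[int]) -> str:
    return f"{len(processed)} records processed, total = {sum(processed)}"


def send_report(report: str) -> str:
    # Stand-in for email: build the message text rather than sending it.
    return f"To: team@example.com\nSubject: Daily report\n\n{report}"


Step = Tuple[str, Callable[[Any], Any]]


def run_pipeline(steps: List[Step]) -> Tuple[Any, List[Tuple[str, str]]]:
    """Run steps in order, passing each step the previous step's output.

    Returns the final output and a (step, state) log. If a step raises, it is
    logged as 'failed', later steps as 'upstream_failed', and output is None.
    """
    log: List[Tuple[str, str]] = []
    result: Any = None
    for index, (name, func) in enumerate(steps):
        try:
            result = func(result)
        except Exception:
            log.append((name, "failed"))
            log.extend((later, "upstream_failed") for later, _ in steps[index + 1:])
            return None, log
        log.append((name, "success"))
    return result, log


STEPS: List[Step] = [
    ("download", download_data),
    ("process", process_data),
    ("report", build_report),
    ("email", send_report),
]

if __name__ == "__main__":
    message, states = run_pipeline(STEPS)
    print(states)
    print(message)
```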

Let’s start with the installation of Apache Airflow

First, install Python 3 on your system.

Update and Refresh Repository Lists

sudo apt update

Install Supporting Software

sudo apt install software-properties-common

Add Deadsnakes PPA

sudo add-apt-repository ppa:deadsnakes/ppa

Install Python 3

sudo apt install python3.8

Once you have followed these steps, Python 3.8 will be installed on your system.

When you install Airflow, make sure your system is using Python 3, not Python 2.
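If you are not sure which interpreter you are about to use, a tiny standard-library script can check before you install anything. The (3, 8) minimum below is an assumption that simply matches the Python 3.8 installed above; each Airflow release documents its own range of supported Python versions.

```python
import sys
from typing import Tuple


def meets_minimum(version: Tuple[int, int], minimum: Tuple[int, int] = (3, 8)) -> bool:
    """True if `version` is Python 3 and at least `minimum` (compared as tuples)."""
    return version[0] == 3 and tuple(version) >= minimum


if __name__ == "__main__":
    current = sys.version_info[:2]
    if meets_minimum(current):
        print(f"Python {current[0]}.{current[1]}: OK")
    else:
        sys.exit(f"Python {current[0]}.{current[1]} is too old for this setup")
```

Run it with the interpreter you plan to use for Airflow, for example python3 check_python.py (the file name is arbitrary).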

Step 1: First, install pip for Python 3 (the python3-pip package) on your system.

sudo apt-get install python3-pip

Step 2: Airflow needs a home directory on your local system. The default location is ~/airflow, but you can change it to suit your requirements. The export only applies to the current shell session, so add it to your ~/.bashrc if you want it to persist.

export AIRFLOW_HOME=~/airflow

Step 3: Now install Apache Airflow using pip with the following command.

pip3 install apache-airflow

Once you have followed these steps, Apache Airflow will be installed on your system, and pip will print a confirmation message.
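To double-check the result from Python, here is a small standard-library sketch. It prints the directory Airflow will treat as its home (the AIRFLOW_HOME variable from step 2, falling back to ~/airflow when the variable is not set) and the apache-airflow version that pip installed, looked up with importlib.metadata (available from Python 3.8).

```python
import os
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def airflow_home() -> str:
    """Directory Airflow uses: $AIRFLOW_HOME if set, otherwise ~/airflow."""
    return os.path.expanduser(os.environ.get("AIRFLOW_HOME", "~/airflow"))


def installed_version(package: str = "apache-airflow") -> Optional[str]:
    """Version string of an installed distribution, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    print("AIRFLOW_HOME:", airflow_home())
    print("apache-airflow:", installed_version() or "not installed")
```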

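Step 4: Airflow stores its metadata (DAG runs, task states, and so on) in a database, which must be initialized before Airflow is first used:

airflow initdb

This is the Airflow 1.x command. On Airflow 2.x, use airflow db init instead (from Airflow 2.7 onward, airflow db migrate).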
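Assuming the default configuration, where the metadata database is a SQLite file named airflow.db inside AIRFLOW_HOME (SQLite is meant for local experiments; production setups typically use PostgreSQL or MySQL), this sketch opens that file read-only and lists the tables the initialization step created. The exact table names depend on your Airflow version.

```python
import os
import sqlite3
from typing import List


def list_tables(db_path: str) -> List[str]:
    """Return the table names in a SQLite database, opened read-only."""
    if not os.path.exists(db_path):
        raise FileNotFoundError(db_path)
    # mode=ro stops sqlite3 from silently creating a new, empty file.
    conn = sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)
    try:
        rows = conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        ).fetchall()
    finally:
        conn.close()
    return [name for (name,) in rows]


if __name__ == "__main__":
    home = os.path.expanduser(os.environ.get("AIRFLOW_HOME", "~/airflow"))
    db_file = os.path.join(home, "airflow.db")
    if os.path.exists(db_file):
        tables = list_tables(db_file)
        print(f"{len(tables)} tables, for example {tables[:5]}")
    else:
        print(f"No database at {db_file}; has the initialization step been run?")
```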

Step 5: To start the web server, run the following command in the terminal. The default port is 8080; if you are already using that port for something else, pass a different one with -p.

airflow webserver -p 8080

Step 6: Now start the Apache Airflow scheduler in a second terminal, since the web server keeps running in the first one:

airflow scheduler
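Step 5 mentions that port 8080 might already be taken. One quick way to find out before starting the web server is to try opening a TCP connection to the port on localhost, as in this standard-library sketch; the fallback ports 8081 and 8082 are arbitrary examples.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """True if something is already accepting TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(1.0)
        # connect_ex returns 0 on success instead of raising an exception.
        return sock.connect_ex((host, port)) == 0


if __name__ == "__main__":
    for candidate in (8080, 8081, 8082):
        if not port_in_use(candidate):
            print(f"Port {candidate} is free: airflow webserver -p {candidate}")
            break
    else:
        print("Ports 8080-8082 are all taken; pick another port with -p")
```

Whatever port you choose, use the same one in the dashboard URL in the next step.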

Step 7: Now let’s access the Airflow dashboard. Open a web browser and go to the following address:

http://localhost:8080/home

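If the Airflow dashboard appears when you open localhost on port 8080, Airflow has been successfully installed on your system. On Airflow 2.x the dashboard first shows a login page, so create an account beforehand with the airflow users create command (run airflow users create --help to see its options).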
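You can also check the installation from a script. This sketch assumes Airflow 2.x, whose web server exposes a /health endpoint that returns JSON with a status for the metadata database and the scheduler; other Airflow versions may use a different path, so adjust it if the request fails. It uses only the standard library and returns None when the server cannot be reached.

```python
import json
import urllib.request
from typing import Any, Dict, Optional


def component_statuses(payload: Dict[str, Any]) -> Dict[str, str]:
    """Map each component in a health payload to its 'status' field."""
    return {
        name: str(info.get("status", "unknown"))
        for name, info in payload.items()
        if isinstance(info, dict)
    }


def check_airflow(
    base_url: str = "http://localhost:8080", timeout: float = 5.0
) -> Optional[Dict[str, str]]:
    """GET <base_url>/health and return component statuses, or None on failure."""
    try:
        with urllib.request.urlopen(f"{base_url}/health", timeout=timeout) as resp:
            payload = json.load(resp)
    except (OSError, ValueError):
        # URLError, HTTPError and timeouts are OSError subclasses;
        # a body that is not valid JSON raises ValueError.
        return None
    if not isinstance(payload, dict):
        return None
    return component_statuses(payload)


if __name__ == "__main__":
    statuses = check_airflow()
    if statuses is None:
        print("Web server not reachable; is `airflow webserver` running?")
    else:
        for name, status in statuses.items():
            print(f"{name}: {status}")
```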

That’s it for this blog, folks! In the next blog, we will learn about Airflow DAGs. Thanks!


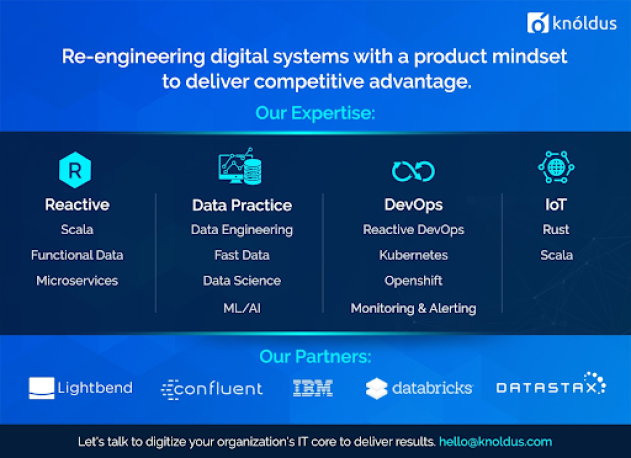

To read more tech blogs, visit Knoldus Blogs.