DC/OS provides a number of tools out-of-the-box, ranging from basic network connectivity between containers to more advanced features, such as load balancing and service discovery. In this blog post, we'll look at the mechanisms through which DC/OS 1.12 provides networking.

What is Service Discovery?

Service discovery is how your applications and services find each other. Given the name of a service, service discovery tells you where that service is: on which IP/port pairs are its instances running? Service discovery is an essential component of multi-service applications because it allows services to refer to each other by name, independent of where they're deployed. Service discovery is doubly critical in scheduled environments like DC/OS because service instances can be rescheduled, added, or removed at any point, so the location of a service is constantly changing.
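To make the idea concrete, here is a minimal sketch of the information a service discovery system keeps: a mapping from a service name to the IP/port pairs of its current instances, which changes as the scheduler moves instances around. The `ServiceRegistry` class and the addresses are hypothetical illustrations, not part of DC/OS.

```python
from collections import defaultdict


class ServiceRegistry:
    """Toy in-memory registry mapping a service name to its live instances."""

    def __init__(self):
        # service name -> set of (ip, port) pairs
        self._instances = defaultdict(set)

    def register(self, service, ip, port):
        self._instances[service].add((ip, port))

    def deregister(self, service, ip, port):
        self._instances[service].discard((ip, port))

    def lookup(self, service):
        # Sorted so that callers see a stable order; unknown names yield [].
        return sorted(self._instances.get(service, set()))


registry = ServiceRegistry()
registry.register("my-search", "10.0.1.12", 31005)
registry.register("my-search", "10.0.2.7", 31822)
# The scheduler moves one instance: the old endpoint disappears, a new one appears.
registry.deregister("my-search", "10.0.1.12", 31005)
registry.register("my-search", "10.0.3.4", 31190)
print(registry.lookup("my-search"))  # [('10.0.2.7', 31822), ('10.0.3.4', 31190)]
```

Clients only ever ask for `my-search`; where its instances currently run is the registry's concern.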

Fortunately for systems running in DC/OS, Marathon itself can act as a source of service discovery information, eliminating much of the need to run a separate service discovery system, at least if you have a good way of connecting your application to Marathon.

What is a Load Balancer?

A load balancer is a device that distributes network or application traffic across a cluster of servers. Load balancing improves responsiveness and increases the availability of applications.

A load balancer sits between the client and the server farm, accepting incoming network and application traffic and distributing it across multiple backend servers using various methods. By balancing application requests across multiple servers, a load balancer reduces the load on individual servers and prevents any single application server from becoming a single point of failure, thus improving overall application availability and responsiveness.
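Round robin is one of the simplest of those "various methods". The sketch below, a hypothetical `RoundRobinBalancer` class, hands requests to backends in turn and skips any backend marked unhealthy, which is how a load balancer keeps one failed server from taking the application down.

```python
import itertools


class RoundRobinBalancer:
    """Minimal round-robin balancer that skips unhealthy backends."""

    def __init__(self, backends):
        self._backends = list(backends)
        self._healthy = set(self._backends)
        self._cycle = itertools.cycle(self._backends)

    def mark_down(self, backend):
        self._healthy.discard(backend)

    def mark_up(self, backend):
        if backend in self._backends:
            self._healthy.add(backend)

    def next_backend(self):
        # Look at each backend at most once per call so we never loop forever.
        for _ in range(len(self._backends)):
            candidate = next(self._cycle)
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])
lb.mark_down("app-2")
picks = [lb.next_backend() for _ in range(4)]
print(picks)  # ['app-1', 'app-3', 'app-1', 'app-3']
```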

Now, let's look at the mechanisms through which DC/OS provides networking services to users. The DC/OS network stack provides:

- IP connectivity to containers

- Built-in DNS-based service discovery

- Layer 4 and layer 7 load balancing

IP Connectivity To Containers

A container running on DC/OS can obtain an IP address using one of three networking modes, regardless of the container runtime (UCR or Docker) used to launch it. These are:

Host mode networking

In host mode, the containers run on the same network as the other DC/OS system services such as Mesos and Marathon. They share the same Linux network namespace and see the same IP address and ports seen by DC/OS system services. Host mode networking is the most restrictive; it does not allow the containers to use the entire TCP/UDP port range, and the applications have to be able to use whatever ports are available on an agent.
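The practical consequence of host mode is that a task cannot pick an arbitrary port; it has to take whatever the agent still has free. The sketch below models that constraint with a hypothetical `assign_host_ports` function. It assumes the agent advertises the range 31000-32000 (commonly the Mesos default, used here only as an example); in DC/OS the real assignment happens through Mesos resource offers, not this code.

```python
def assign_host_ports(offered_ranges, in_use, count):
    """Pick `count` free ports from the ranges an agent offers.

    offered_ranges: list of inclusive (begin, end) tuples, e.g. [(31000, 32000)].
    in_use: set of ports already held by other tasks on the same agent.
    """
    if count == 0:
        return []
    assigned = []
    for begin, end in offered_ranges:
        for port in range(begin, end + 1):
            if port not in in_use:
                assigned.append(port)
                if len(assigned) == count:
                    return assigned
    raise ValueError("agent does not offer enough free ports")


# Two tasks already hold 31000 and 31001 on this agent, so a new
# host-mode task that needs two ports gets the next free ones.
print(assign_host_ports([(31000, 32000)], {31000, 31001}, 2))  # [31002, 31003]
```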

Bridge mode networking

In bridge mode, containers are launched on a Linux bridge created within the DC/OS agent. Containers running in this mode get their own Linux network namespace and IP address, so they can use the entire TCP/UDP port range. This mode is very useful when the application port is fixed. The main drawback of this mode is that containers running on other agents can reach these containers only through port-mapping rules on the agent. Both UCR and Docker install port-mapping rules for any container that is launched with bridge mode networking.
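A port-mapping rule is essentially a lookup from a container port to a port on the agent. The sketch below shows what a client on another agent has to connect to. The mapping keys are loosely modeled on Marathon's `portMappings`, but the function `external_endpoint` and the addresses are hypothetical.

```python
def external_endpoint(agent_ip, port_mappings, container_port, protocol="tcp"):
    """Return the (agent_ip, host_port) a client on another agent must use to
    reach `container_port` inside a bridge-mode container on `agent_ip`."""
    for mapping in port_mappings:
        if (mapping["containerPort"] == container_port
                and mapping.get("protocol", "tcp") == protocol):
            return agent_ip, mapping["hostPort"]
    # Without a mapping the container port is unreachable from other agents.
    raise LookupError(f"container port {container_port}/{protocol} is not mapped")


mappings = [{"containerPort": 80, "hostPort": 31080, "protocol": "tcp"}]
print(external_endpoint("10.0.4.21", mappings, 80))  # ('10.0.4.21', 31080)
```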

Container mode networking

In container mode, a container gets its own IP address and can be attached to different types of IP networks, regardless of the container runtime (UCR or Docker) used to launch it. However, the two container runtimes implement IP-per-container support differently. UCR uses the network/cni isolator to implement the Container Network Interface (CNI) and provide networking support for Mesos containers, while the DockerContainerizer relies on the Docker daemon, which provides networking support through Docker's Container Network Model.

Of the three modes, this is the most flexible and feature-rich, since containers get their own Linux network namespace and connectivity between containers is guaranteed by the underlying SDN without relying on port-mapping rules on the agent. Further, because SDNs are very flexible and can provide network isolation through firewalls, they make it easy for operators to run multi-tenant clusters. This networking mode also allows the container's network to be completely isolated from the host network, giving the host network an extra level of security by protecting it from DDoS attacks launched by malicious containers running on DC/OS.
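In a Marathon app definition, the networking mode is chosen per app. The sketch below builds the `networks` field for each of the three modes. It assumes Marathon's `networks` schema with `mode` values `host`, `container/bridge`, and `container`, and assumes a virtual network named `dcos`; check the Marathon and DC/OS documentation for your version before relying on these names. The helper `networks_for` is hypothetical.

```python
import json


def networks_for(mode, network_name=None):
    """Build the `networks` field of a Marathon app definition (assumed schema)."""
    if mode == "host":
        return [{"mode": "host"}]
    if mode == "bridge":
        return [{"mode": "container/bridge"}]
    if mode == "container":
        # Container mode must name the virtual (SDN) network to join.
        if not network_name:
            raise ValueError("container mode needs a virtual network name")
        return [{"mode": "container", "name": network_name}]
    raise ValueError(f"unknown networking mode: {mode}")


app = {"id": "/my-service", "networks": networks_for("container", "dcos")}
print(json.dumps(app, indent=2))
```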

DNS-Based Service Discovery

DC/OS includes highly available, distributed, DNS-based service discovery. DNS is an integral part of DC/OS. Because tasks move around frequently in DC/OS and their locations must be resolved dynamically, typically by some application protocol, tasks are referred to by name. Rather than implementing a ZooKeeper or Mesos client in every project, DC/OS uses DNS as the lingua franca for discovery among all of its components.

The service discovery mechanism in DC/OS contains these components:

- A centralized component called Mesos DNS, which runs on every master.

- A distributed component called dcos-dns, which runs as an application within an Erlang VM called dcos-net. The dcos-net Erlang VM runs on every node (agents and masters) in the cluster.

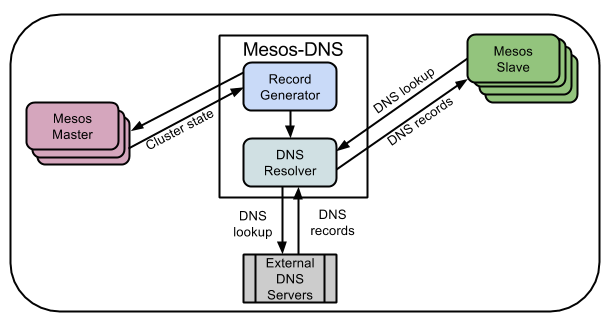

Mesos DNS

Mesos-DNS provides service discovery within DC/OS clusters. It is fully integrated into DC/OS and allows applications and services on the cluster to find each other through the domain name system (DNS), similar to how services discover each other throughout the Internet. Mesos-DNS follows RFC 1123 for name formatting.
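RFC 1123 restricts each label of a host name to letters, digits, and hyphens, 1 to 63 characters long, with no leading or trailing hyphen. The sketch below checks that rule and shows one way a service name with other characters could be turned into a valid label. The sanitizer `to_rfc1123_label` is a hypothetical illustration, not Mesos-DNS's exact conversion.

```python
import re

# RFC 1123 host-name label: letters, digits and hyphens, 1-63 characters,
# not starting or ending with a hyphen (a leading digit is allowed).
_LABEL = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?")


def is_rfc1123_label(label):
    return _LABEL.fullmatch(label) is not None


def to_rfc1123_label(name):
    """Hypothetical sanitizer: lowercase, map invalid characters to '-',
    trim hyphens at the ends and truncate to 63 characters."""
    label = re.sub(r"[^a-z0-9-]", "-", name.lower()).strip("-")[:63].rstrip("-")
    if not label:
        raise ValueError(f"cannot derive a DNS label from {name!r}")
    return label


print(is_rfc1123_label("my-search"), is_rfc1123_label("my_search"))  # True False
print(to_rfc1123_label("My_Search"))  # my-search
```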

Mesos-DNS is designed for reliability and simplicity. It requires little configuration and is automatically pointed to the DC/OS master nodes at launch. Mesos-DNS periodically queries the masters (every 30 seconds by default) to retrieve the state of all running tasks from all running services, and to generate A and SRV DNS records for these tasks. As tasks start, finish, fail, or restart on the DC/OS cluster, Mesos-DNS updates the DNS records to reflect the latest state.
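Because Mesos-DNS rebuilds its records from each state snapshot, it needs no memory of previous polls. The sketch below regenerates A and SRV records from one snapshot using the naming formats described in the next sections. The task dictionary keys and the per-task host name format (`<task>-<id>.<service>.mesos`) are hypothetical simplifications; Mesos-DNS uses its own per-task naming, and the 30-second polling loop around this function is omitted.

```python
from collections import defaultdict


def build_records(tasks, domain="mesos"):
    """Regenerate A and SRV records from one snapshot of running tasks.

    Each task is a dict with hypothetical keys: name, id, framework, ip, ports.
    Everything is rebuilt from the snapshot, so a task that stopped since the
    previous poll simply has no records any more.
    """
    a_records = defaultdict(set)
    srv_records = defaultdict(set)
    for task in tasks:
        name, fw = task["name"], task["framework"]
        service_host = f"{name}.{fw}.{domain}"
        # Hypothetical per-task host name so each SRV target maps to one instance.
        task_host = f"{name}-{task['id']}.{fw}.{domain}"
        a_records[service_host].add(task["ip"])
        a_records[task_host].add(task["ip"])
        for port in task.get("ports", []):
            srv_records[f"_{name}._tcp.{fw}.{domain}"].add((task_host, port))
    return dict(a_records), dict(srv_records)


snapshot = [
    {"name": "my-search", "id": "a1", "framework": "marathon", "ip": "10.0.2.7", "ports": [31822]},
    {"name": "my-search", "id": "b2", "framework": "marathon", "ip": "10.0.3.4", "ports": [31190]},
]
a_records, srv_records = build_records(snapshot)
print(sorted(a_records["my-search.marathon.mesos"]))  # ['10.0.2.7', '10.0.3.4']
```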

If the Mesos-DNS process fails, systemd automatically restarts it. Mesos-DNS then retrieves the latest state from the DC/OS masters and begins serving DNS requests without additional coordination. Mesos-DNS does not require consensus mechanisms, persistent storage, or a replicated log because it does not implement heartbeats, health monitoring, or lifetime management for applications; this functionality is already built into the DC/OS masters, agents, and services.

A Records

An A record associates a hostname with an IP address. When a task is launched by a DC/OS service, Mesos-DNS generates an A record for a hostname in the format <task>.<service>.mesos that resolves to one of the following:

- The IP address of the agent node that is running the task.

- The IP address of the task’s network container (provided by a Mesos containerizer).

For example, other DC/OS tasks can discover the IP address of a service named my-search launched by the marathon service with a lookup for:

my-search.marathon.mesos
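Since discovery happens over plain DNS, a task needs no DC/OS-specific client; the operating system's resolver is enough. The sketch below uses Python's standard `socket.getaddrinfo` to collect IPv4 addresses for a name. The helper `resolve_ipv4` is hypothetical, and the cluster name only resolves from a node inside a DC/OS cluster, so the call is left commented out.

```python
import socket


def resolve_ipv4(hostname, port=0):
    """Resolve a name with the system resolver and return its IPv4 addresses."""
    infos = socket.getaddrinfo(hostname, port, socket.AF_INET, socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, (ip, port)).
    return sorted({info[4][0] for info in infos})


# Only resolvable from a node inside the cluster:
# print(resolve_ipv4("my-search.marathon.mesos"))
```

SRV lookups are not covered by `getaddrinfo`; they need a DNS library or a tool such as `dig`.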

SRV Records

An SRV record specifies the hostname and port of a service. For example, other Mesos tasks can discover a task named my-search launched by the marathon service with a query for:

_my-search._tcp.marathon.mesos

DC/OS DNS

dcos-dns is a distributed DNS server that runs on every agent and master as part of an Erlang VM called dcos-net, which makes it highly available. The instance running on the leading master periodically polls the leading master's state and generates FQDNs for every application launched by DC/OS. It then sends this information to its peers in the cluster. All these FQDNs use the .directory TLD. For example, for a service called my-service, the corresponding address would be:

my-service.marathon.autoip.dcos.thisdcos.directory:$MY_PORT

dcos-dns intercepts all DNS queries originating within an agent. If the query ends with the .directory TLD, it is resolved locally; if it ends with .mesos, dcos-dns forwards the query to one of the Mesos-DNS instances running on the masters. Otherwise, it forwards the query to the configured upstream DNS server based on the TLD.
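The routing rule above is a suffix match on the queried name. The sketch below expresses it as a hypothetical `route_query` function. The master and upstream addresses are made up, the per-TLD upstream table is an assumption about how "based on the TLD" could be configured, and always choosing the first master is a simplification; a real resolver would spread load and fail over.

```python
def route_query(qname, masters, upstreams_by_tld, default_upstream):
    """Return where dcos-dns would send a query, per the suffix rules.

    masters: addresses of masters running Mesos-DNS (hypothetical values).
    upstreams_by_tld: hypothetical per-TLD upstreams, e.g. {"corp": "10.1.1.1"}.
    """
    name = qname.rstrip(".").lower()
    if name.endswith(".directory"):
        return ("local", None)  # answered by dcos-dns itself
    if name.endswith(".mesos"):
        return ("mesos-dns", masters[0])  # simplification: first master
    tld = name.rsplit(".", 1)[-1]
    return ("upstream", upstreams_by_tld.get(tld, default_upstream))


print(route_query("my-search.marathon.mesos.", ["10.0.0.1"], {}, "198.51.100.53"))
# ('mesos-dns', '10.0.0.1')
```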

Load Balancing

dcos-l4lb

DC/OS provides an east-west load balancer (Minuteman) that enables multi-tier microservices architectures. It acts as a TCP layer 4 load balancer and leverages load-balancing features within the Linux kernel to achieve near line-rate throughputs and latency. The features include:

- Distributed load balancing of applications.

- Facilitates east-west communication within the cluster.

- Lets users address a DC/OS service by FQDN.

- Respects health checks.

- Automatically allocates virtual IPs to service FQDN.

When you launch a set of tasks, DC/OS distributes them to a set of nodes in the cluster. The Minuteman instance running on each of the cluster agents coordinates the load balancing decisions. Minuteman on each agent programs the IPVS module within the Linux kernel with entries for all the tasks associated with a given service. This allows the Linux kernel to make load-balancing decisions at near line-rate speeds. Minuteman tracks the availability and reachability of these tasks and keeps the IPVS database up-to-date with all of the healthy backends, which means the Linux kernel can select a live backend for each request that it load balances.
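The kernel modules Minuteman requires (listed below) include `ip_vs_wlc`, IPVS's weighted least-connection scheduler. The sketch below is a simplified user-space model of that idea: among the backends currently in the table, pick the one with the fewest active connections per unit of weight. It is not the kernel's exact arithmetic, the backend dictionaries are hypothetical, and the `healthy` flag stands in for Minuteman removing unhealthy backends from the IPVS table.

```python
def pick_backend_wlc(backends):
    """Simplified weighted least-connection choice.

    backends: list of dicts with keys addr, weight, active, healthy.
    Weight-0 backends receive no new connections.
    """
    candidates = [b for b in backends if b["healthy"] and b["weight"] > 0]
    if not candidates:
        return None
    best = candidates[0]
    for b in candidates[1:]:
        # active/weight comparison done by cross-multiplying (no floats).
        if b["active"] * best["weight"] < best["active"] * b["weight"]:
            best = b
    return best["addr"]


backends = [
    {"addr": "10.0.1.5:8080", "weight": 1, "active": 4, "healthy": True},
    {"addr": "10.0.2.9:8080", "weight": 2, "active": 6, "healthy": True},
    {"addr": "10.0.3.3:8080", "weight": 1, "active": 0, "healthy": False},
]
print(pick_backend_wlc(backends))  # 10.0.2.9:8080 (6/2 = 3 beats 4/1 = 4)
```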

Minuteman has the following requirements and limitations:

- You must not firewall traffic between the nodes.

- You must not change ip_local_port_range.

- You must run a stock kernel from RHEL 7.2+ or Ubuntu 14.04+ LTS.

- Requires the following kernel modules: ip_vs, ip_vs_wlc, dummy.

- Requires an iptables rule to masquerade connections to VIPs:

  `RULE="POSTROUTING -m ipvs --ipvs --vdir ORIGINAL --vmethod MASQ -m comment --comment Minuteman-IPVS-IPTables-masquerade-rule -j MASQUERADE"`

  `iptables --wait -t nat -I ${RULE}`

- To support intra-namespace bridge VIPs, bridge netfilter needs to be disabled.

- IPVS conntrack needs to be enabled.

- Port 61420 must be open for the load balancer to work correctly. Because the load balancer maintains a partial mesh, it needs to ensure that connectivity between nodes is unhindered.
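Several of these requirements can be checked on an agent by reading `/proc`. The sketch below lists missing kernel modules and prints the relevant kernel settings. The function names are hypothetical, and the sysctl paths are assumptions: `net.ipv4.vs.conntrack` only exists once `ip_vs` is loaded, and "bridge netfilter disabled" is assumed to mean `bridge-nf-call-iptables` is 0. Modules compiled into the kernel do not appear in `/proc/modules`, so a reported module may still be available.

```python
from pathlib import Path

REQUIRED_MODULES = ("ip_vs", "ip_vs_wlc", "dummy")


def read_proc(path):
    """Return the stripped contents of a /proc file, or None if it is absent."""
    p = Path(path)
    return p.read_text().strip() if p.exists() else None


def missing_modules(proc_modules_text, required=REQUIRED_MODULES):
    """List required modules that do not appear in /proc/modules-style text."""
    loaded = {line.split()[0] for line in proc_modules_text.splitlines() if line.strip()}
    return [m for m in required if m not in loaded]


if __name__ == "__main__":
    print("missing modules:", missing_modules(read_proc("/proc/modules") or ""))
    # Should stay at the distribution default.
    print("ip_local_port_range:", read_proc("/proc/sys/net/ipv4/ip_local_port_range"))
    # IPVS connection tracking: expected to be 1.
    print("net.ipv4.vs.conntrack:", read_proc("/proc/sys/net/ipv4/vs/conntrack"))
    # Bridge netfilter: assumed disabled means 0.
    print("bridge-nf-call-iptables:",
          read_proc("/proc/sys/net/bridge/bridge-nf-call-iptables"))
```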

VIP Addresses

DC/OS can map traffic from a single Virtual IP (VIP) to multiple IP addresses and ports. DC/OS VIPs are name-based, which means clients connect with a service address instead of an IP address. DC/OS automatically generates name-based VIPs that do not collide with IP VIPs. VIPs follow this naming convention:

<service-name>.marathon.l4lb.thisdcos.directory:<port>

You can also use the layer 4 load balancer by assigning a VIP in your app definition. After you create a task, or a set of tasks, with a VIP, the VIP automatically becomes available to all nodes in the cluster, including the masters. For example, Kafka automatically creates a VIP when you install it, and you can use this VIP to address any one of the Kafka brokers in the cluster. The name by which the Kafka brokers are accessible is:

broker.kafka.l4lb.thisdcos.directory:9092
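The sketch below ties the naming convention to an app definition. It assumes the DC/OS convention of requesting a VIP with a port label of the form `VIP_0: "/<name>:<port>"` (verify against the DC/OS documentation for your version), and the helpers `vip_label` and `vip_address` are hypothetical. Only top-level service names are handled; names inside Marathon groups are not covered.

```python
def vip_label(service_name, vip_port, index=0):
    """Port label asking DC/OS to create a named VIP (assumed format)."""
    return {f"VIP_{index}": f"/{service_name}:{vip_port}"}


def vip_address(service_name, vip_port, framework="marathon"):
    """Name-based VIP: <service-name>.<framework>.l4lb.thisdcos.directory:<port>."""
    return f"{service_name}.{framework}.l4lb.thisdcos.directory:{vip_port}"


port_definition = {"port": 0, "protocol": "tcp", "labels": vip_label("my-service", 5555)}
print(port_definition["labels"])  # {'VIP_0': '/my-service:5555'}
print(vip_address("my-service", 5555))  # my-service.marathon.l4lb.thisdcos.directory:5555
```

The same formatting reproduces the Kafka broker name above: `vip_address("broker", 9092, framework="kafka")`.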

Edge-LB

Edge-LB proxies and load balances traffic to all services that run on DC/OS. It acts as a layer 7 load balancer. Edge-LB provides North-South (external to internal) load balancing, while the dcos-l4lb component provides East-West (internal to internal) load balancing.

Edge-LB leverages HAProxy, which provides the core load balancing and proxying features, such as load balancing for TCP and HTTP-based applications, SSL support, and health checking. In addition, Edge-LB provides first class support for zero downtime service deployment strategies, such as blue/green deployment. Edge-LB subscribes to Mesos and updates HAProxy configuration in real time.

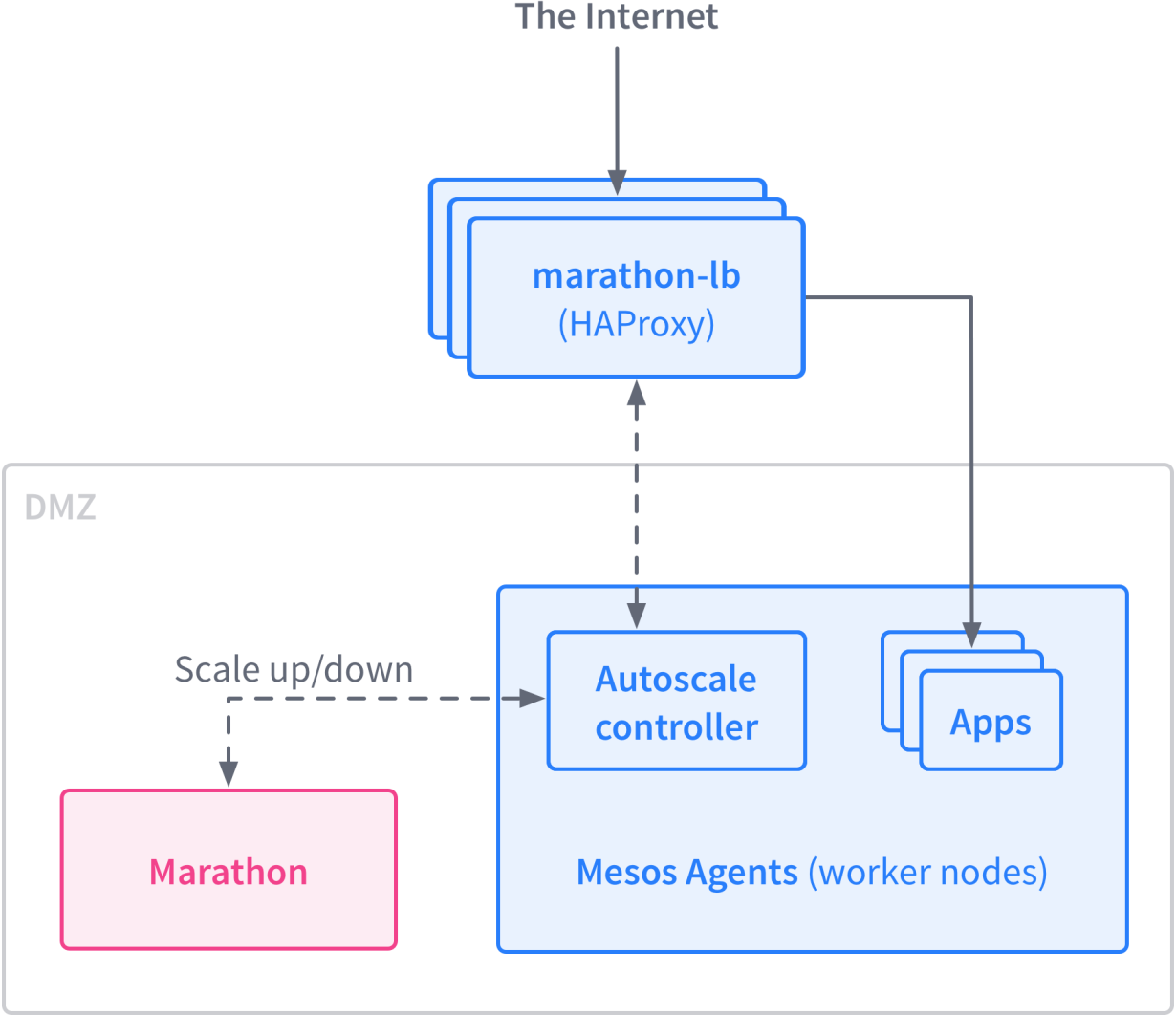

Marathon-LB

Marathon-LB is based on HAProxy, a fast proxy and load balancer. It can act as both a layer 4 and a layer 7 load balancer. HAProxy provides proxying and load balancing for TCP- and HTTP-based applications, with features such as SSL support, HTTP compression, health checking, Lua scripting, and more. Marathon-LB subscribes to Marathon's event bus and updates the HAProxy configuration in real time.
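Apps opt in to Marathon-LB through labels in their Marathon app definition. The sketch below adds two labels documented by Marathon-LB, `HAPROXY_GROUP` (which Marathon-LB instance picks the app up) and `HAPROXY_0_VHOST` (HTTP virtual-host routing for the app's first port); treat the exact semantics as something to confirm in the Marathon-LB documentation. The helper `expose_via_marathon_lb` and the host name are hypothetical.

```python
def expose_via_marathon_lb(app, vhost, group="external"):
    """Return a copy of a Marathon app definition with Marathon-LB labels."""
    labels = dict(app.get("labels", {}))  # copy so the input app is untouched
    labels["HAPROXY_GROUP"] = group
    labels["HAPROXY_0_VHOST"] = vhost
    return {**app, "labels": labels}


app = {"id": "/my-service", "labels": {"team": "search"}}
exposed = expose_via_marathon_lb(app, "my-service.example.com")
print(exposed["labels"])
```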
