Performance and scalability are two of the main factors to consider when building a distributed system. People often use the two terms interchangeably, but they mean different things. Because this causes a lot of confusion, this blog discusses performance and scalability, how they differ, and how they relate.

Performance –

Performance is all about optimizing response time. In other words, it’s about improving how quickly a system can handle a single request. Put simply, it refers to the capability of a system to deliver a certain response time, which makes performance a software quality metric.

Here, we don’t care about how much load or how many requests we can handle at a time. Instead, we look at a single request and evaluate how much time it takes.

Measuring Performance –

Specifically, we’ll discuss three basic measures of performance –

1. Response Time –

It’s a direct measure of how much time a system takes to process a request. It is widely used to measure performance.
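As a minimal sketch of what measuring response time can look like, the snippet below times one call with a monotonic clock. The `handle_request` function is a hypothetical stand-in for whatever your system does with a request; it is not part of any real framework.

```python
import time


def handle_request(payload):
    """Hypothetical stand-in for real request handling (assumption)."""
    return sum(i * i for i in range(payload))


def measure_response_time(handler, payload):
    """Run handler once and return (result, elapsed time in seconds)."""
    start = time.perf_counter()  # monotonic, high-resolution clock
    result = handler(payload)
    elapsed = time.perf_counter() - start
    return result, elapsed


result, seconds = measure_response_time(handle_request, 10_000)
print(f"response time: {seconds * 1000:.3f} ms")
```

A single sample is noisy, so in practice you would probably repeat the measurement and look at an average or a percentile.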

2. Throughput –

Throughput is the number of requests an application can complete per unit of time. It varies widely with the kind of work, from many requests per second for a lightweight request to around one per hour for a long-running job.
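Throughput is just a count divided by a time window, so it can be sketched in a few lines. The function name and the sample numbers below are made up for illustration.

```python
def throughput(completed_requests, elapsed_seconds, unit_seconds=1):
    """Requests completed per unit of time.

    unit_seconds picks the unit: 1 for per-second, 3600 for per-hour.
    """
    if elapsed_seconds <= 0 or unit_seconds <= 0:
        raise ValueError("time values must be positive")
    return completed_requests / (elapsed_seconds / unit_seconds)


# Hypothetical measurements, for illustration only.
per_second = throughput(600, 60)                   # 600 requests in one minute
per_hour = throughput(1, 3600, unit_seconds=3600)  # 1 job in one hour
print(per_second, "requests/second")
print(per_hour, "requests/hour")
```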

3. System Availability –

Availability is an indispensable metric, usually expressed as the percentage of the application’s total running time during which it can be accessed, that is, total time minus downtime, divided by total time.
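The availability percentage described above can be sketched as follows. The 30-day window and the downtime figure are assumed values chosen for the example, not measurements.

```python
def availability_percent(total_seconds, downtime_seconds):
    """Share of the total time the application was reachable, in percent."""
    if total_seconds <= 0:
        raise ValueError("total_seconds must be positive")
    if not 0 <= downtime_seconds <= total_seconds:
        raise ValueError("downtime must be between 0 and total_seconds")
    return 100.0 * (total_seconds - downtime_seconds) / total_seconds


# Assumed example: a 30-day month with 2592 seconds (43.2 minutes) of downtime.
month_seconds = 30 * 24 * 3600
availability = availability_percent(month_seconds, 2592)
print(f"{availability:.1f}%")
```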

Scalability –

Scalability, on the other hand, is about optimizing our ability to handle load. In other words, it’s about improving how many requests a system can handle at a time. Scalability refers to the ability of a system to handle more load by adding resources.

Here, we don’t care about a single request and the time it takes. Instead, we focus on how much load or how many requests we can handle at a time.

Measuring Scalability –

Just like performance, we’ll discuss three basic measures of scalability –

1. Throughput – The number of requests completed per unit of time, observed here as load and resources grow.

2. Resource Usage – The usage level of all the resources involved (CPU, memory, disk, bandwidth, etc.).

3. Cost – The most common factor when measuring scalability, expressed as the price per transaction.
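To make the cost measure concrete, here is a small sketch that computes price per transaction before and after adding resources. All figures are hypothetical, picked only to show that doubling spend does not always double throughput.

```python
def cost_per_transaction(total_cost, transactions):
    """Price paid per completed transaction."""
    if transactions <= 0:
        raise ValueError("transactions must be positive")
    return total_cost / transactions


# Hypothetical figures: doubling the hardware spend raises the number of
# transactions by less than double, so each transaction gets more expensive.
before = cost_per_transaction(100.0, 1_000_000)
after = cost_per_transaction(200.0, 1_800_000)
print(f"before scaling: {before:.6f} per transaction")
print(f"after scaling:  {after:.6f} per transaction")
```

Watching this number alongside throughput and resource usage gives a rough idea of whether adding resources is still paying off.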

Performance & Scalability –

If you look at a measurement like requests-per-second, you’ll see that it actually measures both. That makes it a valuable metric, because we can use it to see whether we have improved our performance or our scalability. It is also somewhat restrictive: when it improves, we can’t tell which of the two changed.
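A deliberately simplified model shows why requests-per-second mixes the two. It assumes a fixed pool of workers, each handling one request at a time and always busy; the function name and the numbers are illustrative assumptions, not a description of any real system.

```python
def requests_per_second(workers, response_time_seconds):
    """Simplified model: each worker handles one request at a time
    and is never idle (assumption)."""
    if workers <= 0 or response_time_seconds <= 0:
        raise ValueError("workers and response time must be positive")
    return workers / response_time_seconds


baseline = requests_per_second(workers=4, response_time_seconds=0.5)
faster = requests_per_second(workers=4, response_time_seconds=0.25)  # better performance
wider = requests_per_second(workers=8, response_time_seconds=0.5)    # better scalability

print(baseline, faster, wider)
```

Halving the response time and doubling the number of workers give the same requests-per-second figure in this model, so the metric alone cannot say which change was made.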

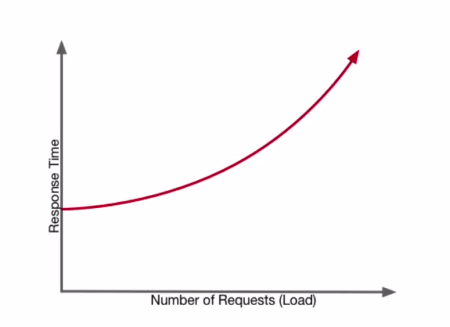

Let’s look at the graph above, which describes the relationship between load and response time.

As the graph shows, increasing load leads to an increase in response time.

In theory, scalability has no limit, whereas performance does: a request can never take less than zero time. When we build Reactive Microservices, we tend to focus on improving scalability because we know that we can push that forever. That doesn’t mean we ignore performance, but it tends to be something that we focus on less.

References –

https://academy.lightbend.com/courses/course-v1:lightbend+LRA-BuildingScalableSystems+v1/about